Unsupervised anomaly detection makes it possible to automate tasks relating to the maintenance of equipment and infrastructure, which until now have been carried out by experts.

One of the features of the BL.Predict equipment management platform is the ability to manually define alert thresholds so that you are warned when the systems being monitored break down. To do this, each case of failure must be referenced using specific criteria that can be traced in the measurements taken by the sensors.

However, it is difficult to know the full list of possible faults. More often than not, we have to wait to observe them (and therefore suffer the consequences) before formalising them… which can be costly. Unsupervised anomaly detection could alleviate this problem by automatically spotting unexpected behaviour and informing the operator directly.

But what is anomaly detection?

Anomaly detection aims to highlight suspicious behaviour or degradations in operation based on the analysis of system data. It seeks to detect symptoms and is therefore different from diagnosis, which identifies the causes.

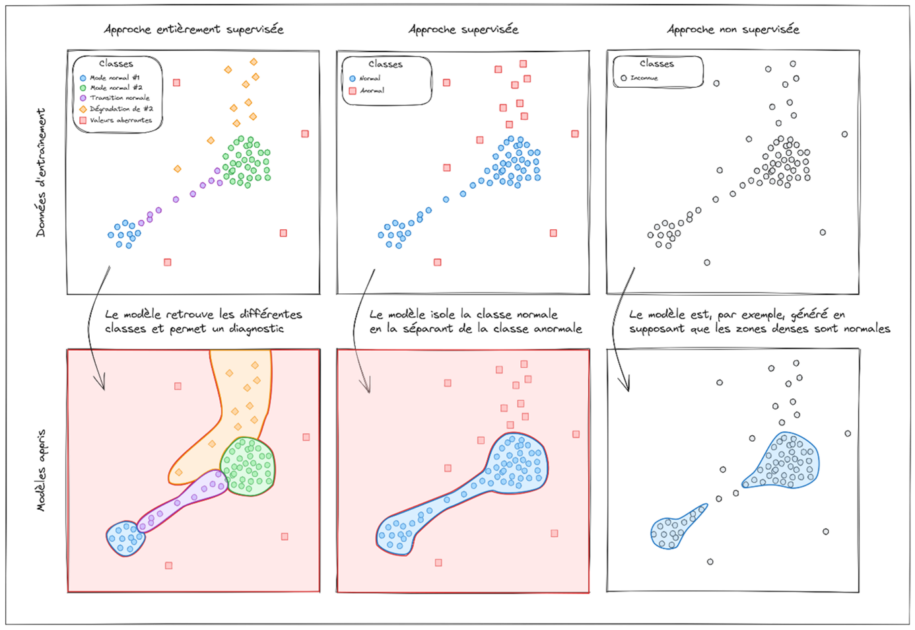

Anomaly detection is divided into two categories:

- Supervised detection involves learning from labelled data, i.e. measurements that are labelled ‘normal’ or ‘abnormal’. This detection approach generates a model that separates normal measurements from abnormal measurements. Sometimes it can even suggest an initial diagnosis.

- Unsupervised detection involves learning from unlabelled data, i.e. a set of measurements whose state is unknown. This detection method builds a model that relies on assumptions about the data to establish what normal behaviour looks like.

In practice, unsupervised methods characterise abnormality by means of an anomaly score; the higher the score, the more likely the data analysed is to characterise a potential fault.
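As a rough illustration of what an anomaly score can look like, here is a minimal sketch that learns a notion of normality from unlabelled measurements, using the median and the median absolute deviation. This is a generic textbook technique chosen for brevity, not one of the methods used in BL.Predict; the function names and sample values are hypothetical.

```python
import statistics


def fit_normality(measurements):
    """Summarise 'normal' behaviour from unlabelled data (no labels needed)."""
    centre = statistics.median(measurements)
    # Median absolute deviation: a spread estimate that tolerates a few outliers
    spread = statistics.median([abs(x - centre) for x in measurements])
    return centre, spread


def anomaly_score(x, centre, spread):
    """The further a measurement lies from the centre, the higher its score."""
    if spread == 0:
        return 0.0 if x == centre else float("inf")
    return abs(x - centre) / spread


# Hypothetical unlabelled sensor readings; one of them looks unusual
readings = [4.9, 5.1, 5.0, 5.2, 4.8, 5.0, 9.7, 5.1]
centre, spread = fit_normality(readings)
scores = [anomaly_score(x, centre, spread) for x in readings]

for value, score in zip(readings, scores):
    print(f"measurement={value:4.1f}  score={score:6.1f}")
```

The operator then only has to decide from which score a measurement deserves attention, instead of describing each fault in advance.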

What are the special features of BL.Predict?

With BL.Predict, sensors are positioned on equipment to monitor and understand its operation. These sensors collect data streams: time-stamped measurements delivered in real time. The measurements need to be processed as they come in, because abnormal behaviour must be detected as early as possible.

The systems studied also evolve over time, whether by following distinct operating modes or by receiving a command that modifies their behaviour. Anomaly detection models must therefore be able to adapt to these changes. Static methods, although still commonly used, cannot.

Our response with BL.Predict

To integrate with BL.Predict, anomaly detection methods must meet the following requirements:

- Be able to adapt quickly to new measurements,

- Be light in memory (independent of the number of measurements), quick to execute and easy to interpret to facilitate diagnosis after detection,

- Be easy to parameterise to reduce the degree of human supervision required for their deployment and operation,

- Offer understandable performance metrics,

- Offer the possibility of combining several models into one in order to pool knowledge about similar systems.
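To make some of these requirements concrete, the sketch below shows a deliberately simple online model: a running mean and variance (Welford's algorithm). It updates with each new measurement, keeps a memory footprint that does not depend on the length of the stream, and can be merged with another model of the same kind. It is only an illustration under these assumptions, not the model used in BL.Predict; the class name and the sample values are hypothetical.

```python
import math


class RunningStats:
    """Online mean and variance; memory does not grow with the stream."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, x):
        """Welford update: adapt the model to one new measurement."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        return math.sqrt(self.m2 / self.n) if self.n > 1 else 0.0

    def score(self, x):
        """Anomaly score: distance to the mean, in standard deviations."""
        s = self.std()
        return abs(x - self.mean) / s if s > 0 else 0.0

    def merge(self, other):
        """Combine two models into one without revisiting the raw data."""
        merged = RunningStats()
        merged.n = self.n + other.n
        if merged.n == 0:
            return merged
        delta = other.mean - self.mean
        merged.mean = self.mean + delta * other.n / merged.n
        merged.m2 = (self.m2 + other.m2
                     + delta ** 2 * self.n * other.n / merged.n)
        return merged


# Hypothetical readings from two similar pieces of equipment
unit_a = [20.0, 20.5, 19.8, 20.2]
unit_b = [21.0, 20.7, 20.9]

model_a, model_b, direct = RunningStats(), RunningStats(), RunningStats()
for x in unit_a:
    model_a.update(x)
for x in unit_b:
    model_b.update(x)
for x in unit_a + unit_b:
    direct.update(x)  # reference model trained on all the data at once

pooled = model_a.merge(model_b)
print(pooled.n, round(pooled.mean, 3), round(pooled.std(), 3))
print(round(pooled.score(20.4), 2), round(pooled.score(27.0), 2))
```

Merging gives the same statistics as training a single model on both streams, which is the property needed to pool knowledge about similar systems.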

Limitations of current approaches

The approaches currently in place take two forms:

- The definition of alerts based on thresholds to obtain personalised monitoring of the correct operation of a system: this can quickly become inflexible when the thresholds to be set are non-linear, dependent on a large number of attributes (multiplication of clauses) or when there is a large number of abnormal behaviours (multiplication of rules).

- The use of a static anomaly detection method called One-Class SVM to ensure the stability of a system’s behaviour: static by nature, it cannot track how behaviour evolves over time. Yet this tracking is necessary to detect changes and start a new learning process adapted to the new behaviour of the equipment being managed.
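The first of these approaches, threshold-based alerting, can be pictured as a list of hand-written rules. The sketch below is purely illustrative: the attribute names, threshold values and the `AlertRule` structure are hypothetical and do not reflect how BL.Predict stores its alerts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AlertRule:
    name: str
    condition: Callable[[Dict[str, float]], bool]


# Every known failure case needs its own rule, written by an expert
RULES: List[AlertRule] = [
    AlertRule("pressure too high", lambda m: m["pressure"] > 8.0),
    AlertRule("intensity too high", lambda m: m["intensity"] > 15.0),
    # Rules that depend on several attributes multiply the clauses
    AlertRule(
        "high intensity at low pressure",
        lambda m: m["intensity"] > 10.0 and m["pressure"] < 2.0,
    ),
]


def triggered(measurement: Dict[str, float],
              rules: List[AlertRule] = RULES) -> List[str]:
    """Return the names of all the rules that the measurement violates."""
    return [rule.name for rule in rules if rule.condition(measurement)]


print(triggered({"intensity": 5.0, "pressure": 3.0}))
print(triggered({"intensity": 12.0, "pressure": 1.5}))
print(triggered({"intensity": 16.0, "pressure": 9.0}))
```

Each new abnormal behaviour adds a rule, and each additional attribute adds clauses to existing rules, which is where this approach becomes hard to maintain.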

Technical aspects and benefits of our solution

To optimise the management of equipment and infrastructure, several methods can be used to detect anomalies in data streams. BL.Predict implements three of them, all capable of adapting to behaviour that changes over time:

- The KDE method: based on the kernel density estimation approach. From a set of samples called kernel centres, a model is generated in the form of a function estimating the probability density associated with the data distribution. If a measurement is found in an area of the data space where the density is too low, it is considered abnormal. The main limitation of this approach is the choice of kernel centres among the measurements: their number must not depend on the size of the data stream. They are therefore selected subjectively within a sliding window of a fixed size to be defined.

- The DyCF method: based on the Christoffel function, borrowed from the theory of approximation and orthogonal polynomials. It summarises all the samples in a matrix of fixed size, which depends only on the number of attributes and on a method parameter. It defines an anomaly score that captures the support of the probability measure associated with the data set. It is simpler to parameterise than the KDE method, but more difficult to interpret.

- The DyCG method: based on an asymptotic property of the Christoffel function. By relying on two DyCF models with different parameters, it further reduces the number of parameters to be set. Although easier to parameterise than the DyCF method, it is even more difficult to interpret.
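The two ideas underlying the first two methods above can be sketched in a few lines for a single attribute. The code is a simplified illustration, not the BL.Predict implementation: the class names, window size, bandwidth and polynomial degree are all assumptions, the Christoffel-style score is left unscaled (the normalisation and decision threshold used by DyCF are not reproduced), and DyCG is not covered.

```python
import math
from collections import deque


class SlidingKDE:
    """Gaussian KDE whose kernel centres are the last `window` measurements."""

    def __init__(self, window=50, bandwidth=0.5):
        self.centres = deque(maxlen=window)  # memory bounded by the window
        self.bandwidth = bandwidth

    def update(self, x):
        self.centres.append(x)  # the oldest centre is dropped automatically

    def density(self, x):
        """Estimated density at x; a low value suggests an anomaly."""
        if not self.centres:
            return 0.0
        h = self.bandwidth
        norm = len(self.centres) * h * math.sqrt(2 * math.pi)
        return sum(math.exp(-0.5 * ((x - c) / h) ** 2)
                   for c in self.centres) / norm


class ChristoffelScore:
    """Unscaled Christoffel-style score built from a fixed-size moment matrix."""

    def __init__(self, degree=2):
        self.size = degree + 1  # matrix size depends only on the degree
        self.n = 0
        self.sums = [[0.0] * self.size for _ in range(self.size)]

    def _monomials(self, x):
        return [x ** k for k in range(self.size)]

    def update(self, x):
        """Add one measurement to the running sum of outer products."""
        v = self._monomials(x)
        self.n += 1
        for i in range(self.size):
            for j in range(self.size):
                self.sums[i][j] += v[i] * v[j]

    def score(self, x):
        """Return v(x)^T M^-1 v(x), where M is the empirical moment matrix."""
        v = self._monomials(x)
        m = self.size
        # Augmented system [M | v], solved by Gaussian elimination
        a = [[s / self.n for s in row] + [v[i]]
             for i, row in enumerate(self.sums)]
        for col in range(m):
            pivot = max(range(col, m), key=lambda r: abs(a[r][col]))
            a[col], a[pivot] = a[pivot], a[col]
            for r in range(col + 1, m):
                factor = a[r][col] / a[col][col]
                for c in range(col, m + 1):
                    a[r][c] -= factor * a[col][c]
        y = [0.0] * m
        for i in reversed(range(m)):
            rest = sum(a[i][j] * y[j] for j in range(i + 1, m))
            y[i] = (a[i][m] - rest) / a[i][i]
        return sum(vi * yi for vi, yi in zip(v, y))


# Hypothetical stream: a signal oscillating between -1 and 1
stream = [math.sin(0.3 * t) for t in range(200)]
kde, cf = SlidingKDE(), ChristoffelScore()
for x in stream:
    kde.update(x)
    cf.update(x)

for x in (0.0, 5.0):
    print(f"x={x}: density={kde.density(x):.3g}, score={cf.score(x):.3g}")
```

The KDE model has to keep a window of past measurements, whereas the moment matrix keeps the same size however long the stream runs. In both cases, a value far from anything observed so far stands out: the density collapses and the Christoffel-style score grows sharply.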

In short, by using these new methods in BL.Predict, we will be able to carry out targeted detection by proposing new, more flexible thresholds on well-chosen attributes. Going further, we want to use our methods for systemic detection, taking all the attributes of a system as input and then automating the selection of key attributes. The aim here will be to identify abnormal and unexpected behaviour at system level.

Deploying anomaly detection in BL.Predict

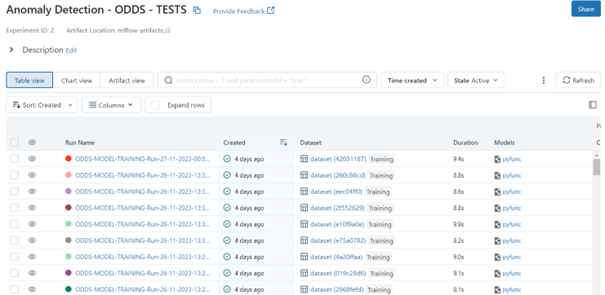

In a system integrating a multitude of sensors, as is the case with BL.Predict, model lifecycle management is crucial to keeping anomaly detection systems performant, stable and able to adapt as they scale.

The MLOps approach is an essential element in coordinating the various phases of the model lifecycle. By adopting this approach, it becomes possible to implement simplified management of models, from their initial training to their deployment, updating and ongoing monitoring. Regular, automated training, update and inference cycles allow models to adjust dynamically to changes in the data. This automation also facilitates monitoring, guaranteeing rapid detection of deviations in the data.

In BL.Predict, we are experimenting with workflow orchestration systems and APIs for process automation, as well as tools for managing the lifecycle of machine learning models. We have carried out experiments on historical intensity and pressure data collected from air handling units.

Ultimately, we expect to be able to raise automatically defined alerts and discover new abnormal or unexpected behaviour.