Latest articles

Evaluating the Energy Impact of Design Decisions in Web Applications

Software systems represent a significant and growing share of global energy consumption. Recent studies estimate that digital technologies account for approximately 6% of worldwide energy usage, with an annual increase of around 6.2%. This growing concern requires organizations such as Berger-Levrault to measure and reduce the environmental footprint of their software systems. During software development, architects explore various design solutions, including architectural styles (e.g., monolithic, microservices), programming languages (e.g.,

Yearbook Research & Innovation 2025: Governed, Responsible, and reality-based Research!

We are proud to announce the publication of our 2025 Research & Innovation Yearbook! More than just an annual review, this document marks a structural shift in the way we design and conduct research. Innovation is no longer seen as a simple technological promise, but as a demanding discipline, confronted with the constraints of reality

Angular or React: Which One Consumes Less Energy?

When developers choose a frontend framework, the usual criteria come to mind: performance, ecosystem, maintainability, developer experience, and community support. But there is a factor that is almost never discussed: energy consumption! As software systems grow in scale, the environmental impact of digital technologies is becoming impossible to ignore. While green software engineering research has

Financing Long-Term Innovation: A Strategic Lever for Research at Berger-Levrault

A Strong Commitment to Innovation. As a leading industrial company in software and digital services for local authorities and public-sector stakeholders, Berger-Levrault places innovation at the heart of its development strategy. In a context of accelerated digital transformation, anticipating future uses, exploring new technologies, and preparing tomorrow’s solutions have become key challenges

Circular economy and Collaborative Networks: The Future of Asset Management

The global economy is shifting toward sustainability, driven by environmental urgency, regulatory demands, and evolving consumer expectations. Businesses are increasingly adopting circular economy principles, moving away from the linear “take, make, waste” model to systems that extend product lifecycles, minimize waste, and optimize resource use [Rejeb et al., 2025]. This transition aligns with the rise

GitHub Copilot in an R&D Context: Experimentation Feedback and Usage Analysis

After nearly a year of daily use of GitHub Copilot in a software research and development context, here is a feedback report based on my real-world experience. I work as a full-stack developer on projects involving Python, TypeScript (Angular), as well as more transversal topics such as documentation, configuration, and DevOps. Over the months, GitHub

Migrating Katalon Studio Tests to Playwright with Model Driven Engineering

Migrating automated tests from one platform to another is a common challenge for software teams. In a recent project at Berger-Levrault, we tackled the migration of functional test cases from Katalon Studio to Playwright, aiming to minimize manual effort and maximize reliability. This post shares our semi-automated approach, key results, and lessons learned.

Camille Dupré Ph.D. thesis defense: “Pad-based Interaction in Mixed Reality environments”

On Thursday 18th December at 2 p.m. Paris time, Ph.D. candidate Camille Dupré defended her thesis, “Pad-based Interaction in Mixed Reality environments”. The defense took place at LISN in Gif-sur-Yvette (660 Av. des Sciences Bâtiment, 91190), France. Take a look at the summary below. Summary: Mixed Reality (MR) environments integrate virtual elements into

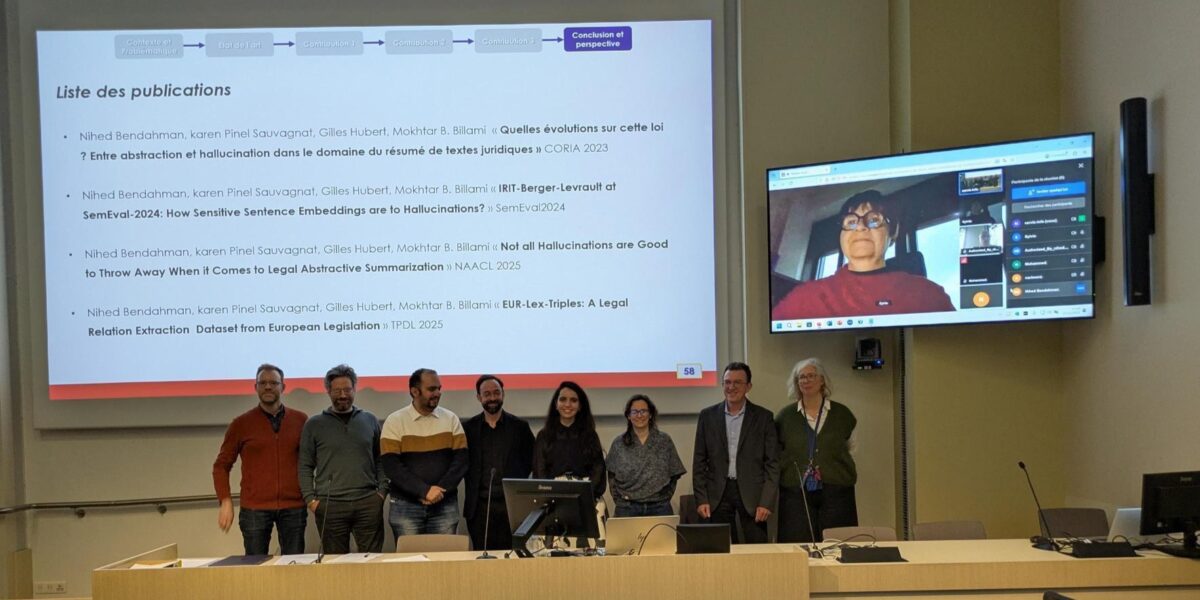

Nihed Bendahman Ph.D. thesis defense: “Evaluation and mitigation of hallucinations in automatic summarization in the specific context of legal documents”

On Monday 15th December at 2 p.m. Paris time, Ph.D. candidate Nihed Bendahman defended her thesis, “Evaluation and mitigation of hallucinations in automatic summarization in the specific context of legal documents”. The defense took place at the IRIT Research Laboratory in Toulouse, France. Take a look at the summary below. Summary: Legal monitoring is a

When business-oriented software meets real life: what SEDIT teaches us

In our first article, we discussed the differences between “ideal scenarios” and actual work practices, and what these differences mean for the design of business-oriented software. In this second installment, we move from theory to practice: how did we measure these differences using SEDIT, an HRIS system from Berger-Levrault used in public administrations? The general

Usage discrepancies: when business software meets real life

Do users really follow the paths carefully mapped out by designers? And when reality goes beyond the scope of the design—which happens more often than we think—how can we know this other than through intuition and assumptions? On a daily basis, business-oriented software supports very concrete activities: managing leave, processing absences, tracking careers, producing regulatory

PMS 2026 – 34th International Conference on Program Comprehension (Toulouse – France)

ICPC – 34th International Conference on Program Comprehension (Rio de Janeiro – Brazil)

CHI 2026 – International Conference on Human-Computer Interaction (Barcelona – Spain)

Forum for advances in visualization and visual analytics – VIS 2025 (Vienna – Austria)

Gabriel Darbord Ph.D. thesis defense: “Automatic test generation to help modernize our applications”

On Friday 5th December at 9 a.m. Paris time, Ph.D. candidate Gabriel Darbord defended his thesis, “Automatic test generation to help modernize our applications”. The defense took place in Lille, France. Take a look at the summary below. This thesis is fully in line with the partnership between Berger-Levrault and Inria, which aims to

Berger-Levrault strengthens its ties with AI startups!

We are proud to announce that we have joined Hub France IA, the largest association dedicated to artificial intelligence in France. This network now brings together more than 250 members—companies, startups, research laboratories, and institutions—who share the same goal: to accelerate the development and adoption of AI in France and Europe. Getting closer to the

Celebrating New PhDs from the BL.Research Team!

At Berger-Levrault, research is more than a mission—it’s a shared adventure. As the new academic year begins, we are proud to celebrate the success of four of our colleagues from the BL.Research team, who have reached a major milestone in their scientific journeys: the defense of their doctoral theses. These achievements are the result of

Hamza Safri Ph.D. thesis defense: “Federated learning for the IoT: Application for Industry 4.0”

On Tuesday 24th June at 3 p.m. Paris time, Ph.D. candidate Hamza Safri defended his thesis, “Federated learning for the IoT: Application for Industry 4.0”. The defense took place at Inria Minatec in Grenoble, France. Take a look at the summary below. Keywords: Model generalization, predictive maintenance, industrial IoT, federated learning, edge network,

ESUG 2025: Six contributions and two prizes for the Software Engineering Lab team!

Congratulations to our PhDs Nicolas Hlad, Aless Hosry, Benoit Verhaeghe, and Pascal Zaragoza from Berger-Levrault’s BL.Research team on their participation in the 2025 edition of the ESUG (European Smalltalk User Group) conference! This year’s conference took place from July 1 to 4 in Gdańsk, Poland. The theme was innovation in Smalltalk technologies and their use in software