Context

Ontologies are widely used in information retrieval (IR), question answering, and decision support systems. They are increasingly regarded as an answer to semantic interoperability in modern computer systems and as a promising approach to knowledge representation. The structuring and management of knowledge are central concerns of the scientific community, and the exponential growth of structured, semi-structured, and unstructured data on the Web has made the automatic acquisition of ontologies from text an important research area.

More specifically, an ontology defines the concepts, relationships, hierarchies of concepts and relationships, and axioms of a given domain. However, building large ontologies manually is extremely labor-intensive and time-consuming. Hence the motivation behind our project: automating the construction of a domain-specific ontology.

Methodology

The Berger-Levrault group offers more than 200 books and hundreds of articles providing legal and practical expertise on the Légibases portal. The book collection is organized by theme, partially annotated, and fully edited by Berger-Levrault and experts.

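This portal covers 8 domains: Civil Status & Cemeteries, Elections, Public Procurement, Town Planning, Local Accounting & Finance, Territorial HR, Justice, and Health.

Corpus statistics:

- Global word count in our database: 50934108

- Number of unique words per line: 600349

- Number of unique words: 220959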

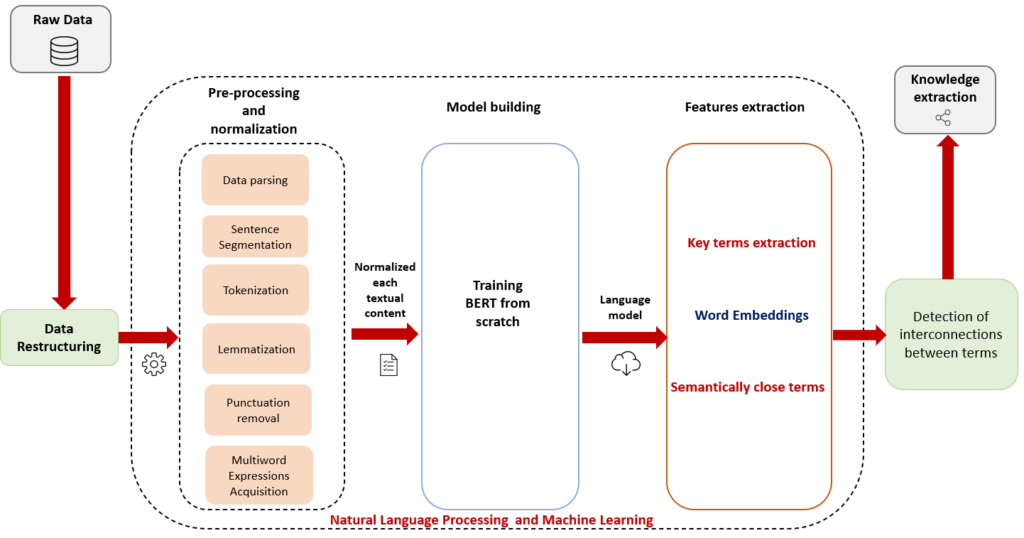

To achieve our goal, we turned to natural language processing (NLP) tools and data mining techniques. Here is a figure summarizing the processing carried out to extract semantically close key terms from Berger-Levrault’s documents:

Data retrieval and restructuring

As the first step of our approach, we took the raw data (RD) stored in our SQL database and restructured it into an organized set of HTML documents whose content we could exploit. Note that at this point, the terms needed for our ontology learning process are the key terms annotated by the experts in each paragraph of each document. The result is a set of 172 HTML documents in French, published by the Berger-Levrault group across 8 Légibases: legal articles relating to one of 8 areas of the public sector (Civil Status & Cemeteries, Elections, Public Procurement, Town Planning, Local Accounting & Finance, Territorial HR, Justice, Health).

Pre-processing and normalization

Once the required format is obtained, we start the pre-processing pipeline, which proceeds as follows:

- Data scraping: the first step is to parse the HTML documents, extract specific bricks (parts) and keywords, and save the result to a text file.

- Sentence segmentation: breaking the text apart into separate sentences, which is a requirement for further processing steps.

- Lemmatization: in most languages, words appear in different forms. Consider these two sentences: « Les députés votent l’abolition de la monarchie constitutionnelle en France. » and « Le député vote l’abolition de la monarchie constitutionnelle en France. » Both sentences talk about “député”, but they use different inflections. When processing text computationally, it is helpful to know the base form of each word so that both sentences can be recognized as referring to the same concept, “député”. This is especially useful when training word embeddings.

- Multiword expression (MWE) acquisition: whitespace inside multiword expressions is replaced by an underscore “_”, so that the term is treated as a single token and a single embedding vector is generated for it, instead of one vector for each of its component words.
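As a rough illustration of the last two steps, here is a minimal sketch that normalizes the two example sentences. The lemma table and the list of multiword terms are hypothetical stand-ins: a real pipeline would rely on a trained French lemmatizer and on the key terms annotated by the experts. For simplicity, the sketch joins multiword terms before the lemma lookup so that the lookup does not alter their components.

```python
import re

# Hypothetical lemma lookup covering only the example sentences;
# a real pipeline would use a trained French lemmatizer instead.
LEMMAS = {"les": "le", "députés": "député", "votent": "voter", "vote": "voter"}

# Hypothetical multiword key terms (in the source, these come from expert annotations).
MULTIWORD_TERMS = ["monarchie constitutionnelle"]


def join_multiword_terms(text, terms):
    """Replace whitespace inside each known multiword term with underscores."""
    # Longest terms first, so a short term cannot pre-empt a longer one.
    for term in sorted(terms, key=len, reverse=True):
        words = term.split()
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in words) + r"\b"
        joined = "_".join(words)
        text = re.sub(pattern, lambda _match: joined, text, flags=re.IGNORECASE)
    return text


def normalize(sentence, lemmas=LEMMAS, terms=MULTIWORD_TERMS):
    """Lowercase, join multiword terms, tokenize, and map tokens to lemmas."""
    text = join_multiword_terms(sentence.lower(), terms)
    tokens = re.findall(r"\w+", text)  # \w keeps accented letters and "_"
    return [lemmas.get(token, token) for token in tokens]


first = normalize("Les députés votent l’abolition de la monarchie constitutionnelle en France.")
second = normalize("Le député vote l’abolition de la monarchie constitutionnelle en France.")
print(first == second)  # True: both sentences reduce to the same token sequence
```

After normalization, both sentences share the lemma “député” and the single token “monarchie_constitutionnelle”, which is what lets an embedding model treat them as the same context.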

To prepare the text content for embedding training, we generate a raw text file from the HTML documents, containing one sentence per line with unified keywords (same representation).
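The generation of that file can be sketched with the standard library alone. This is a simplified illustration, not the project's actual scraper: it assumes the useful text sits inside `<p>` elements, and it splits sentences with a naive punctuation rule that will mis-split abbreviations. The names `TextExtractor`, `split_sentences`, and `html_to_sentences` are hypothetical.

```python
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect the text found inside <p> elements of an HTML document."""

    def __init__(self):
        super().__init__()
        self._in_paragraph = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_paragraph = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_paragraph = False

    def handle_data(self, data):
        if self._in_paragraph:
            self.paragraphs[-1] += data


def split_sentences(text):
    """Naive segmentation: split after '.', '!' or '?' followed by whitespace."""
    text = " ".join(text.split())  # collapse runs of whitespace
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def html_to_sentences(html):
    """Return the sentences of all paragraphs, in document order."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    sentences = []
    for paragraph in parser.paragraphs:
        sentences.extend(split_sentences(paragraph))
    return sentences


def to_training_text(sentences):
    """Format sentences as one sentence per line."""
    return "\n".join(sentences) + "\n"
```

Calling `to_training_text(html_to_sentences(html))` on each document and concatenating the results yields a file in the one-sentence-per-line layout described above.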

Model Building

Now that we have a normalized text file, we can train BERT, a state-of-the-art natural language understanding model, on it using Amazon Web Services infrastructure (SageMaker + S3). To do so, we go through the following steps:

- Learning a new vocabulary to represent our dataset. The BERT paper uses a WordPiece tokenizer, whose vocabulary-learning implementation is not available as open source. Instead, we use the SentencePiece tokenizer in unigram mode. SentencePiece creates two files: tokenizer.model and tokenizer.vocab.

- With the vocabulary at hand, we generate pre-training data for the BERT model. We call the create_pretraining_data.py script from the BERT repo and create TensorFlow records.

- We trained our model using Google's TensorFlow machine learning framework on Amazon Web Services infrastructure (SageMaker + S3) with standard settings.

- We used a batch size of 1024 for sequence length 128 and 30k steps with sequence length 512. Training took about a day.
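One detail the steps above leave implicit is how a SentencePiece vocabulary can be fed to BERT's WordPiece-style tooling. The sketch below shows one common conversion, under the assumption (not stated in the source) that such a conversion was needed here. It relies on two facts about SentencePiece: tokenizer.vocab holds one `piece<TAB>score` entry per line, and the marker "▁" (U+2581) flags pieces that start a word. The function names are hypothetical.

```python
# Special tokens expected by BERT, placed at the top of the vocabulary.
BERT_SPECIAL_TOKENS = ["[PAD]", "[UNK]", "[CLS]", "[SEP]", "[MASK]"]

# Default SentencePiece control symbols, which BERT does not use.
SPM_CONTROL_TOKENS = {"<unk>", "<s>", "</s>"}

WORD_START = "\u2581"  # the "▁" marker SentencePiece puts on word-initial pieces


def read_sentencepiece_vocab(lines):
    """Extract the pieces from 'piece<TAB>score' lines of a .vocab file."""
    pieces = []
    for line in lines:
        line = line.rstrip("\n")
        if line:
            pieces.append(line.split("\t")[0])
    return pieces


def to_wordpiece(piece):
    """Word-initial pieces lose the marker; all other pieces get the '##' prefix."""
    if piece.startswith(WORD_START):
        return piece[len(WORD_START):]
    return "##" + piece


def build_bert_vocab(pieces):
    """Build a BERT-style vocabulary list from SentencePiece pieces."""
    vocab = list(BERT_SPECIAL_TOKENS)
    seen = set(vocab)
    for piece in pieces:
        if piece in SPM_CONTROL_TOKENS:
            continue
        token = to_wordpiece(piece)
        if token and token not in seen:  # a bare "▁" maps to "" and is skipped
            vocab.append(token)
            seen.add(token)
    return vocab
```

Writing the resulting list to a file, one token per line, gives a vocabulary in the layout that the `--vocab_file` option of the BERT scripts reads.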

At the end of this step, we have a BERT model trained from scratch on our corpus, which we can use to generate word embeddings.

Feature extraction

There are several ways to run a BERT model with PyTorch and TensorFlow. To get hands-on with our model quickly, we chose bert-as-service, a Python library that lets us deploy pre-trained BERT models on our local machine and run inference.

We run a Python script that uses the BERT service to encode our words into word embeddings. To do so, we import the BERT client library and create an instance of the client class. We can then feed it the list of words or sentences that we want to encode.
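A minimal sketch of that client code follows. It assumes the bert-serving-client package is installed and that a server has already been started with `bert-serving-start` pointing at the trained model. The wrapper `encode_terms` is a hypothetical helper; it accepts an injected client so the logic can be exercised without a running server.

```python
def encode_terms(terms, client=None):
    """Return a {term: vector} mapping using a bert-as-service client."""
    terms = list(terms)
    if not terms:
        return {}
    if client is None:
        # Imported lazily: needs bert-serving-client and a running server.
        from bert_serving.client import BertClient
        client = BertClient()
    # encode() returns one fixed-length vector per input string.
    vectors = client.encode(terms)
    return {term: list(vector) for term, vector in zip(terms, vectors)}
```

With a server running, `encode_terms(["député", "monarchie_constitutionnelle"])` would return one embedding per term, ready for similarity computations.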

Now that we have a vector for every word in our text file, we use the scikit-learn implementation of cosine similarity between word embeddings to determine how closely the words are related.
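To make the measure concrete, here is a dependency-free sketch of cosine similarity and of a nearest-neighbour lookup built on it. It is an illustrative equivalent of the computation, not the scikit-learn code used in the project; the function names and the toy two-dimensional vectors are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_u * norm_v)


def closest_terms(term, embeddings, k=50):
    """Return the k terms most similar to `term`, as (term, score) pairs."""
    target = embeddings[term]
    scored = [
        (other, cosine_similarity(target, vector))
        for other, vector in embeddings.items()
        if other != term
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy embeddings, for illustration only.
toy = {"maire": [1.0, 0.0], "adjoint": [0.9, 0.1], "budget": [0.0, 1.0]}
print(closest_terms("maire", toy, k=2)[0][0])  # adjoint
```

A score close to 1 means the two vectors point in nearly the same direction, while a score close to 0 means they are nearly orthogonal.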

Here is an overview of the frequency distribution for some terms present in our editorial base:

After obtaining the cosine similarity scores between pairs of terms, we build a CSV file that contains the 100 most frequent key terms in our editorial base, their 50 closest words, and the corresponding similarity scores. The figure below shows the 100 most frequent terms:
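The construction of such a file might look like the sketch below. The column layout (`term`, `frequency`, `neighbour`, `similarity`) and the function name are assumptions, since the source does not describe the file's schema. The inputs are a frequency counter over key terms and a precomputed mapping from each term to its ranked neighbours.

```python
import csv
import io
from collections import Counter


def write_similarity_csv(term_counts, neighbours, out, top_n=100, k=50):
    """Write one row per (frequent term, close word) pair to the file-like `out`."""
    writer = csv.writer(out)
    writer.writerow(["term", "frequency", "neighbour", "similarity"])
    # most_common() orders terms by decreasing frequency.
    for term, frequency in term_counts.most_common(top_n):
        for other, score in neighbours.get(term, [])[:k]:
            writer.writerow([term, frequency, other, f"{score:.7f}"])


# Toy data, for illustration only.
counts = Counter({"maire": 3, "budget": 2, "scrutin": 1})
ranked = {"maire": [("adjoint", 0.91)], "budget": [("dépense", 0.70)]}
buffer = io.StringIO()
write_similarity_csv(counts, ranked, buffer, top_n=2, k=1)
print(buffer.getvalue())
```

Passing an open file instead of the `StringIO` buffer writes the same rows to disk; when doing so, open the file with `newline=""` as the `csv` module recommends.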

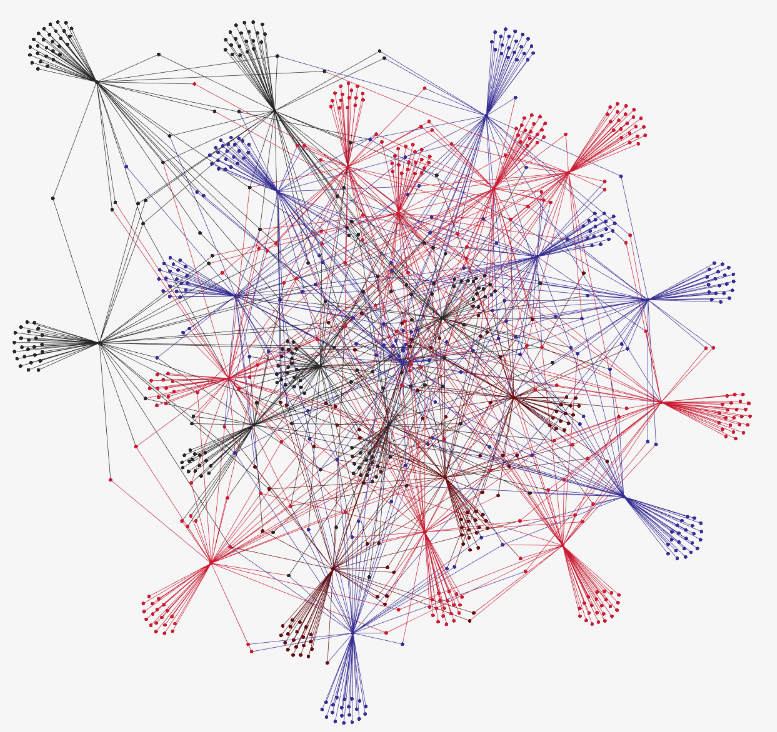

The file is then used as input to create the following labeled graph, which represents the semantic dependencies obtained from the previous steps:

Below are the most important characteristics of the graph:

| Characteristic | Value |
|---|---|
| Total number of key terms | 130269 |
| Number of most relevant terms | 100 |
| Highest score | 0.9528004 |
| Lowest score | 0.4424540 |
| Number of single-word terms | 60 |
| Number of multiword terms | 40 |

Key figures in the graph
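Figures of this kind can be derived directly from the (term, neighbour, score) rows. The sketch below is one possible way to do so, not the project's actual tooling: it stores the graph as a nested dictionary and assumes that multiword terms are recognizable by the underscore introduced during pre-processing. Both function names are hypothetical.

```python
from collections import defaultdict


def build_graph(edges):
    """Build {term: {neighbour: score}} from (term, neighbour, score) triples."""
    graph = defaultdict(dict)
    for term, neighbour, score in edges:
        graph[term][neighbour] = score
    return dict(graph)


def graph_statistics(graph):
    """Summarize score range and single-word vs multiword source terms."""
    scores = [s for neighbours in graph.values() for s in neighbours.values()]
    multiword = sum(1 for term in graph if "_" in term)
    return {
        "source_terms": len(graph),
        "highest_score": max(scores) if scores else None,
        "lowest_score": min(scores) if scores else None,
        "single_word_terms": len(graph) - multiword,
        "multiword_terms": multiword,
    }
```

Each key of the outer dictionary is a node, and each scored entry under it is a labeled edge, so the same structure can be handed to a graph visualization tool.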

Perspective

This article presents our first work on the study of the editorial base corpus. In the continuation of this work, we will apply concept and relation extraction techniques at different levels of analysis (linguistic, statistical, and semantic) and train a classifier as a concept prediction tool.