In the critical domain of Computerized Maintenance Management Systems (CMMS), solutions like CARL Source empower businesses to efficiently create, schedule, and manage maintenance tasks. These platforms are widely used to coordinate work orders for repairing industrial machinery across multiple factories worldwide.

*Update: Coruscant is the name given internally to this R&D project. The commercial name associated with the product is now CARL AI Gen.

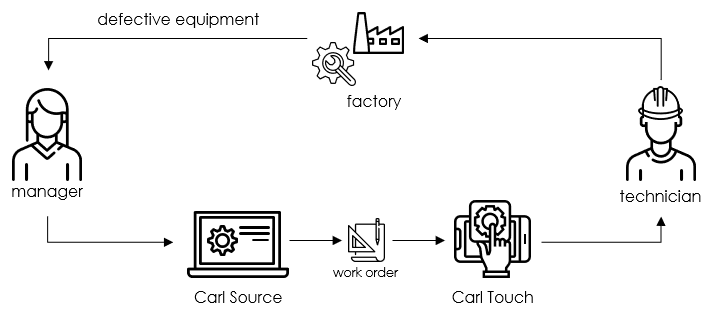

Traditionally, when a piece of equipment breaks down, the issue is reported to a maintenance manager, who then logs the failure in a CMMS tool like CARL Source. The system generates a work order, which is subsequently assigned to a qualified technician responsible for on-site repairs. This structured approach ensures that maintenance operations are documented, prioritized, and executed systematically.

However, as industrial environments become more complex and equipment networks grow, the need for automation and intelligent decision-making in maintenance management is increasing. AI-driven solutions offer the potential to enhance efficiency, optimize resource allocation, and reduce equipment downtime, paving the way for a more proactive and data-driven approach to maintenance operations.

This lifecycle (Figure 1) outlines the entire process of maintenance management, from the initial detection of faulty equipment to the final repair and validation of the intervention. Understanding this workflow is essential for integrating AI-driven automation, as it highlights key stages where intelligent agents can assist—from fault diagnosis and work order generation to task prioritization and technician dispatching.

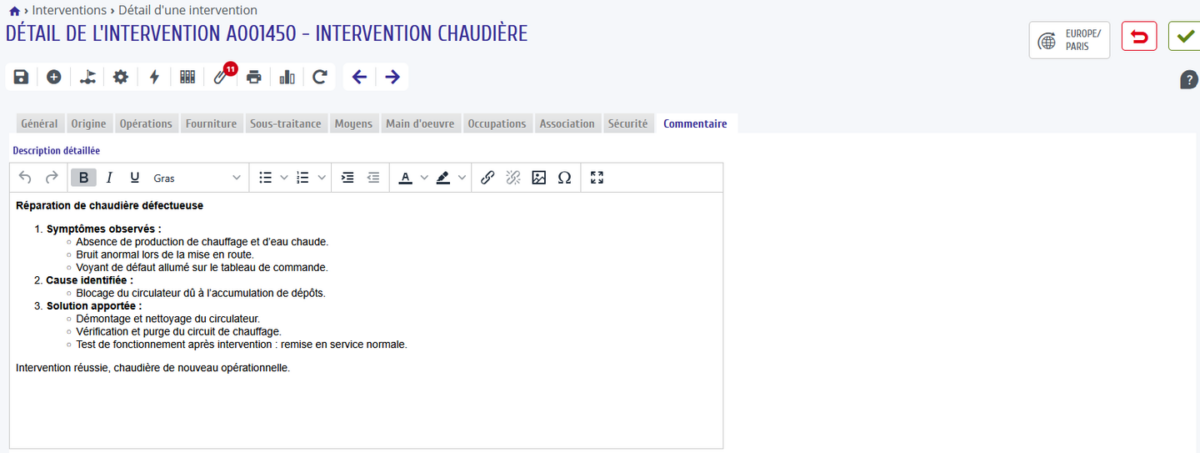

In this context, maintenance managers and technicians frequently interact with interfaces containing extensive forms for structured data entry (see Figure 2). These interfaces are designed to capture crucial details about each intervention, including equipment identification, fault description, intervention scheduling, resource allocation, and compliance tracking.

While such structured data is essential for traceability, reporting, and analytics, manually filling out these forms can be time-consuming, error-prone, and cognitively demanding. Technicians in the field may need to enter data quickly, while managers must ensure that all records are complete, standardized, and compliant with operational guidelines.

This is where AI-driven assistance can bring significant value—by automating data entry, pre-filling fields based on historical interventions, and providing intelligent recommendations. Such innovations can reduce administrative burden, enhance data accuracy, and improve overall efficiency in maintenance workflows.

While structured forms are essential for capturing key data and supporting informed decision-making, they can also hinder the user experience by making the interface more rigid and cumbersome to navigate. Users, particularly technicians in the field, often encounter situations where the available form fields do not fully accommodate the complexity of their input.

As a result, they frequently resort to free-form text fields—such as comment sections—to record critical information that does not fit neatly into predefined categories (see Figure 3). This includes details like symptoms observed, potential failure causes, and repair actions taken.

While this workaround allows technicians to express information more naturally, it significantly reduces data usability in subsequent processes. Free-form text entries are difficult to structure, analyze, and leverage for reporting, predictive maintenance, and decision automation. In the case of Figure 3, instead of using the structured forms provided in Figure 2, the technician opts to document key intervention details in a comment section, making it harder for the system to extract actionable insights.

Additionally, these comments often contain valuable contextual information that could be useful to future technicians performing additional repairs. However, current tools lack robust search functionality, making it nearly impossible to efficiently retrieve relevant information from both free-text comments and structured data fields. This limitation is further exacerbated by mobile constraints, as many technicians only have access to phones or tablets, making searching, navigation, and data retrieval even more difficult.

At Berger-Levrault, we have begun exploring AI and agentic AI as a way to address these challenges and enhance maintenance workflows. By integrating AI-driven solutions, we aim to:

- Extract and structure key insights from free-text comments, making them accessible for future interventions.

- Enable intelligent search across both structured and unstructured data, allowing technicians to quickly find relevant maintenance history.

- Enhance user experience on mobile devices, offering AI-powered assistance for better navigation, retrieval, and contextual recommendations.

By bridging the gap between structured and unstructured data, AI-driven solutions have the potential to streamline maintenance operations, reduce intervention times, and improve collaboration among technicians—ensuring that critical information is never lost and always actionable.

1. Understanding Agentic AI: The Foundations of Autonomous AI Agents

Agentic AI, or generative AI agents, refers to applications designed to observe the environment of specific business applications, interpret information, and take action to achieve specific goals. Unlike traditional AI models that passively generate responses, AI agents operate autonomously, leveraging structured processes to interact with their environment.

At the core of an AI agent lie four fundamental components that enable reasoning, adaptability, and action:

1.1. Models: The Cognitive Engine of AI Agents

Large language models (LLMs) serve as the foundation of Agentic AI, providing capabilities for:

- Understanding and generating text, ensuring meaningful interactions.

- Reasoning, allowing the agent to structure complex tasks.

- Interacting with external tools, extending the agent’s abilities beyond simple text processing.

To optimize cost and efficiency, different LLMs can be selected for distinct tasks: for example, lightweight models for simple queries and more advanced models for complex reasoning or planning.
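As a minimal sketch of this idea, the router below sends each request to a lightweight or an advanced model using a crude heuristic based on reasoning keywords and query length. The model identifiers, the keyword set, and the word-count threshold are hypothetical placeholders, not the configuration used in CARL AI Gen. A production router might instead rely on a classifier or let an LLM make the decision.

```python
import re
from dataclasses import dataclass

# Hypothetical model identifiers: placeholders, not real deployment names.
LIGHT_MODEL = "light-model"
ADVANCED_MODEL = "advanced-model"

# Assumed words that signal multi-step reasoning or planning.
REASONING_HINTS = {"why", "plan", "diagnose", "compare", "cause"}


@dataclass
class Route:
    model: str
    reason: str


def route_request(query: str, max_simple_words: int = 12) -> Route:
    """Pick a model tier for a query with a simple, illustrative heuristic."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if REASONING_HINTS & set(words):
        return Route(ADVANCED_MODEL, "reasoning keyword detected")
    if len(words) > max_simple_words:
        return Route(ADVANCED_MODEL, "long query")
    return Route(LIGHT_MODEL, "short, simple query")
```

For example, `route_request("Status of work order 1042?")` selects the lightweight tier, while "Diagnose why pump P-12 keeps tripping" goes to the advanced one. Matching whole words rather than substrings avoids false positives such as "plan" matching "plant".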

1.2. Tools: Extending AI Agents Beyond Text

AI agents are not confined to text-based interactions—they integrate external tools to:

- Retrieve and analyze real-world information (e.g., databases, APIs, real-time monitoring systems).

- Take action within digital environments, such as executing commands, automating workflows, or modifying records in a system.

By leveraging these tools, AI agents become active participants in their ecosystem rather than passive response generators.
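A minimal sketch of how an agent might expose tools: each tool is a plain function registered under its name with a description, and a dispatcher executes a tool call (a name plus arguments, in the shape a model would emit) against the matching function. One tool reads data and one modifies a record, mirroring the two uses listed above. The tool names and the in-memory work order store are hypothetical and do not reflect the CARL Source API.

```python
from typing import Any, Callable, Dict

# Hypothetical in-memory stand-in for a CMMS work order table.
WORK_ORDERS: Dict[str, Dict[str, Any]] = {
    "WO-1": {"equipment": "Pump P-12", "status": "open"},
}

# Registry: tool name -> {"fn": callable, "description": text shown to the model}.
TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(description: str):
    """Decorator that registers a function as a tool the agent can call."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[fn.__name__] = {"fn": fn, "description": description}
        return fn
    return register


@tool("Read a work order by its identifier.")
def get_work_order(wo_id: str) -> Dict[str, Any]:
    return WORK_ORDERS[wo_id]


@tool("Change the status of a work order.")
def set_status(wo_id: str, status: str) -> Dict[str, Any]:
    WORK_ORDERS[wo_id]["status"] = status
    return WORK_ORDERS[wo_id]


def dispatch(call: Dict[str, Any]) -> Any:
    """Execute a tool call of the form {"name": ..., "arguments": {...}}."""
    entry = TOOLS.get(call["name"])
    if entry is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return entry["fn"](**call["arguments"])
```

For example, `dispatch({"name": "set_status", "arguments": {"wo_id": "WO-1", "status": "closed"}})` updates the record and returns it. Rejecting unknown tool names keeps a model from invoking functions that were never exposed to it.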

1.3. Memory: Ensuring Continuity and Context Awareness

Memory is more than just data storage—it enables AI agents to:

- Recall past interactions, maintaining context across multiple exchanges.

- Adapt dynamically to evolving situations, refining responses over time.

- Enhance decision-making by integrating historical insights into new actions.

Memory is essential for temporal coherence, ensuring that AI agents can operate seamlessly across long-term tasks without losing track of previous discussions or actions.
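A minimal sketch of short-term agent memory, assuming a simple design: a bounded buffer of recent conversation turns plus a small store of longer-lived facts, both rendered as context for the next model call. The class name, the turn limit, and the rendering format are illustrative assumptions; real agents often summarize old turns or retrieve them by similarity rather than simply dropping them.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Tuple


@dataclass
class AgentMemory:
    max_turns: int = 6
    # Recent (role, text) exchanges; the oldest ones are dropped when full.
    turns: Deque[Tuple[str, str]] = field(default_factory=deque)
    # Longer-lived facts, e.g. the equipment the technician is working on.
    facts: Dict[str, str] = field(default_factory=dict)

    def add_turn(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def remember(self, key: str, value: str) -> None:
        self.facts[key] = value

    def context(self) -> List[str]:
        """Render facts first, then recent turns, as lines of prompt context."""
        lines = [f"[fact] {k}: {v}" for k, v in self.facts.items()]
        lines += [f"{role}: {text}" for role, text in self.turns]
        return lines
```

Keeping facts separate from the turn buffer means that key context, such as the equipment under repair, survives even after the exchange that introduced it has been evicted.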

1.4. Orchestration: The Backbone of AI Agent Autonomy

Orchestration serves as the central control mechanism of an AI agent, ensuring smooth operation by:

- Managing interactions between models and tools, determining when and how they are used.

- Handling context and memory, ensuring that past information is leveraged effectively.

- Coordinating workflows, structuring complex tasks into manageable steps.

Orchestration is what allows AI agents to think and execute multi-step processes autonomously, making them powerful assistants for complex real-world applications.
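The loop below sketches how an orchestrator can tie the other components together. It asks the model for the next step, executes any tool the model requests, records every step in memory, and stops when the model returns a final answer or a step budget runs out. The message format is an assumption rather than any specific framework's API, and `fake_model` is a hypothetical scripted stand-in so the example runs without an LLM.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def run_agent(
    question: str,
    model: Callable[[List[Message]], Message],
    tools: Dict[str, Callable[..., Any]],
    max_steps: int = 5,
) -> str:
    """Minimal observe-reason-act loop driven by a model callable."""
    memory: List[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(memory)  # the model decides the next action
        memory.append(step)
        if step["type"] == "final":
            return step["content"]
        # Otherwise the model requested a tool: run it and record the result.
        result = tools[step["tool"]](**step["arguments"])
        memory.append({"role": "tool", "content": str(result)})
    return "Step budget exhausted without a final answer."


def fake_model(memory: List[Message]) -> Message:
    """Hypothetical scripted 'LLM': call one tool, then answer."""
    if memory[-1]["role"] == "user":
        return {"role": "assistant", "type": "tool_call",
                "tool": "search_history", "arguments": {"equipment": "P-12"}}
    return {"role": "assistant", "type": "final",
            "content": f"Based on history: {memory[-1]['content']}"}


# Hypothetical tool returning toy data.
demo_tools = {"search_history": lambda equipment: f"{equipment}: seal replaced"}
```

With these stand-ins, `run_agent("Why does P-12 leak?", fake_model, demo_tools)` performs one tool call and then returns the model's final answer. The step budget is a simple safeguard against a model that never stops requesting tools.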

By combining these four elements (models, tools, memory, and orchestration), Agentic AI turns a passive question-answering system into an active, goal-oriented assistant. This ability to observe, reason, and act makes it well suited to automation, decision support, and process optimization across industries.

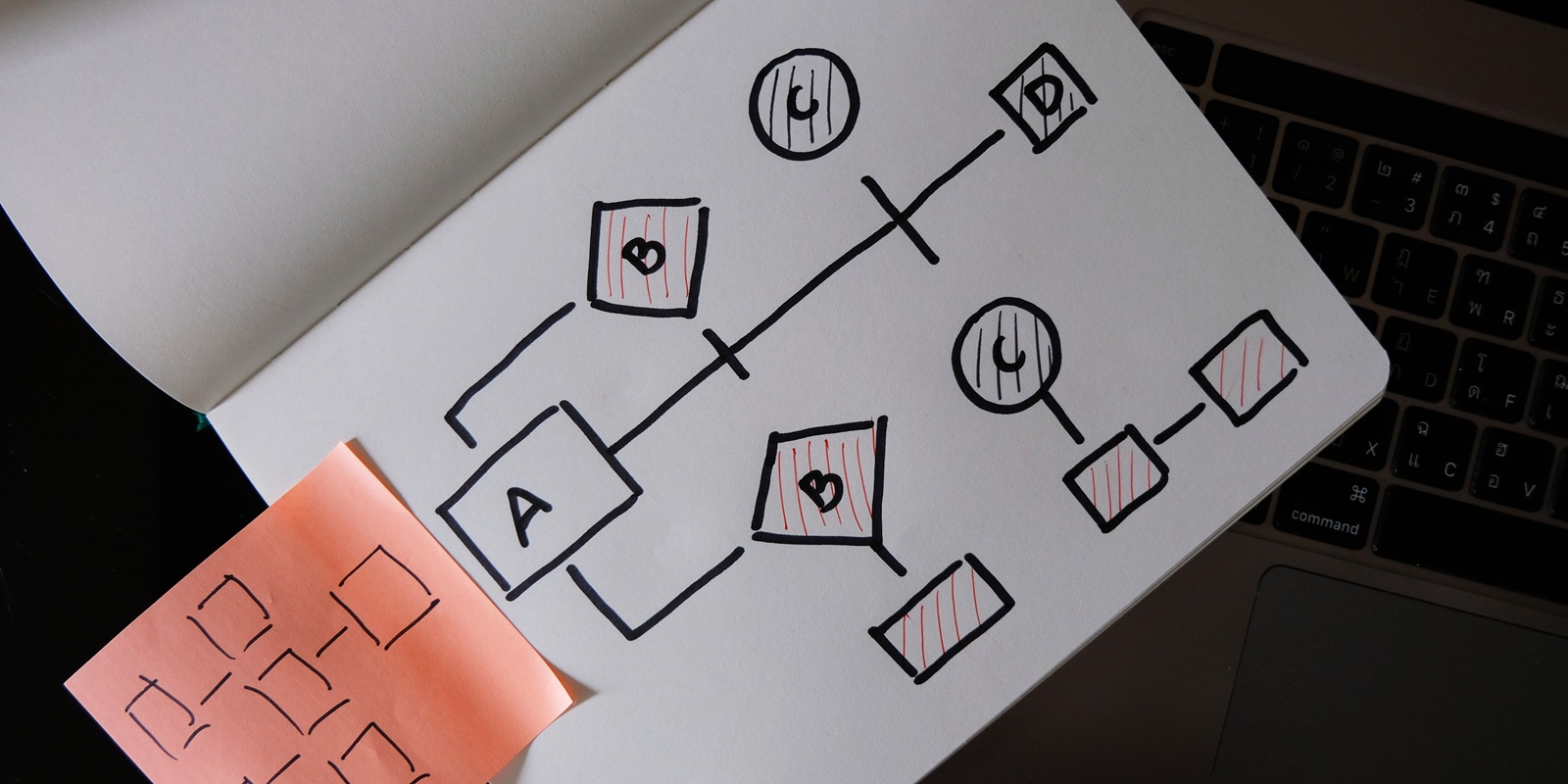

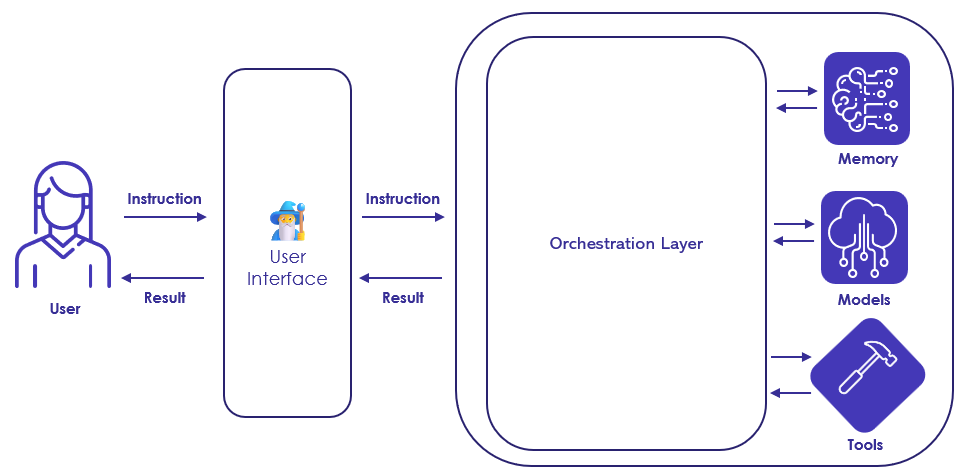

The interaction between AI models, tools, and memory is structured through the orchestration layer, which plays a central role in processing user requests (see Figure 4). This diagram illustrates how a user interacts with the AI system via the user interface, sending instructions that are then processed by the orchestration layer. The orchestration mechanism efficiently manages and coordinates memory, models, and tools to generate a response, ensuring a structured and optimized workflow.

2. CARL AI Gen* Agent: Enhancing Technician Efficiency with AI Assistance

2.1. Introducing Coruscant Agent: AI-Powered Information Retrieval

To bridge the gap between information availability and accessibility, we developed CARL AI Gen, an AI-driven assistant designed to streamline the retrieval of relevant, context-aware data for technicians.

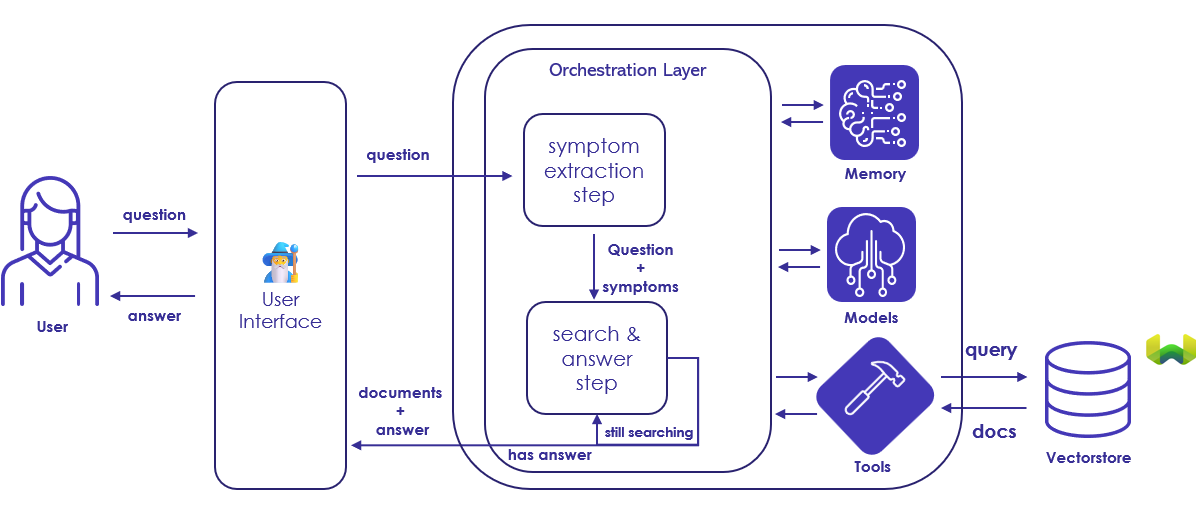

As illustrated in Figure 5, CARL AI Gen Agent operates through a two-step process that applies semantic search across multiple data sources within CARL Source:

- Symptom Extraction:

  - The AI analyzes the technician’s query to identify relevant symptoms, keywords, and contextual clues.

  - These extracted elements refine the search, ensuring a more targeted and precise retrieval of relevant documents.

- Search & Answer Generation:

  - The agent performs semantic searches across work orders, manuals, past interventions, and technician notes, retrieving the most relevant data.

  - If a direct answer is found, it is immediately provided; otherwise, the system continues searching across available sources.
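The two-step flow can be sketched as follows, with deliberate simplifications. Symptom extraction is approximated by matching query words against a small symptom vocabulary, and semantic search by a bag-of-words cosine similarity, whereas the agent described above relies on an LLM and learned embeddings. The vocabulary, the source names, and the `min_score` threshold are hypothetical. Sources are searched in order, and the search stops at the first one that yields a sufficiently relevant match, mirroring the "answer if found, otherwise keep searching" behavior.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Hypothetical symptom vocabulary; an LLM would extract symptoms in practice.
SYMPTOMS = {"leak", "vibration", "overheating", "noise", "tripping"}


def tokens(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def extract_symptoms(query: str) -> List[str]:
    """Step 1: keep only the query terms that name a known symptom."""
    return [t for t in tokens(query) if t in SYMPTOMS]


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search_sources(
    query: str, sources: Dict[str, List[str]], min_score: float = 0.2
) -> Optional[Tuple[str, str, float]]:
    """Step 2: search sources in order; stop at the first good-enough match."""
    symptoms = extract_symptoms(query)
    # Fall back to the full query when no known symptom is found.
    q = Counter(symptoms or tokens(query))
    for name, docs in sources.items():
        scored = [(cosine(q, Counter(tokens(d))), d) for d in docs]
        if scored:
            score, best = max(scored)
            if score >= min_score:
                return name, best, score
    return None  # nothing relevant in any source
```

For a query such as "Pump P-12 shows a leak near the seal", the extracted symptom "leak" drives the search, so a manual entry mentioning leaks is returned even if the work order source is searched first and contains nothing relevant.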

By leveraging CARL AI Gen Agent, technicians can quickly access the most relevant insights, reducing downtime, improving decision-making, and ultimately enhancing the efficiency of maintenance operations.

2.2. Preprocessing Data for Coruscant Agent

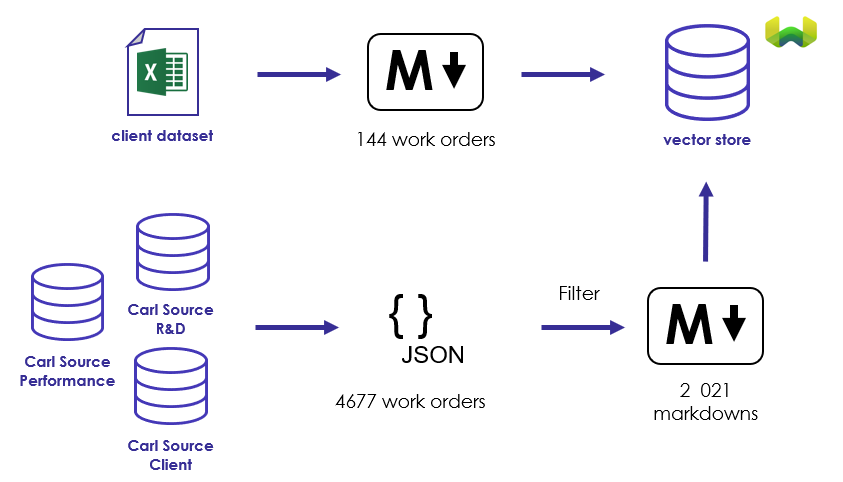

Before implementing CARL AI Gen Agent, we first needed to extract and preprocess text-rich data from multiple CARL Source test databases (see Figure 6). This step was essential to ensure that the AI agent could efficiently retrieve and analyze relevant maintenance information.

To create a comprehensive dataset, we incorporated:

- 4,677 work orders extracted from various CARL Source test databases.

- A smaller dataset of 144 real work orders, enriching our data with real-world maintenance cases.

Once the data was collected, we applied a destructuring process to transform each work order into a text-rich markdown format, making it more suitable for semantic search and AI-driven retrieval. Finally, we generated a semantic embedding for each markdown entry, allowing the data to be indexed into our vector store for fast and intelligent querying.

This preprocessing step laid the groundwork for CARL AI Gen Agent’s ability to perform contextual searches, ensuring that technicians receive highly relevant, structured responses to their queries.
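The preprocessing pipeline can be illustrated under stated assumptions: a work order held as a Python dict is flattened into a markdown document, turned into a vector, and stored in a tiny in-memory index. The field names are hypothetical (not the CARL Source schema), and `embed` is a hashed bag-of-words placeholder standing in for a real sentence-embedding model; `InMemoryIndex` likewise stands in for an actual vector store.

```python
import hashlib
import math
import re
from typing import Dict, List, Tuple

DIM = 256  # toy embedding size


def work_order_to_markdown(wo: Dict[str, str]) -> str:
    """Flatten one work order (hypothetical field names) into markdown."""
    sections = [f"# Work order {wo['id']}"]
    for key in ("equipment", "description", "comment"):
        if wo.get(key):
            sections.append(f"## {key.capitalize()}\n{wo[key]}")
    return "\n\n".join(sections)


def embed(text: str) -> List[float]:
    """Placeholder embedding: hashed bag of words, L2-normalised.

    SHA-256 is used because Python's built-in hash() for strings
    is randomised between runs.
    """
    vec = [0.0] * DIM
    for tok in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(tok.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryIndex:
    """Tiny stand-in for a vector store: brute-force cosine search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float], str]] = []

    def add(self, doc_id: str, markdown: str) -> None:
        self.items.append((doc_id, embed(markdown), markdown))

    def query(self, text: str, k: int = 4) -> List[str]:
        q = embed(text)
        # Vectors are unit-length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), doc_id)
                  for doc_id, vec, _ in self.items]
        return [doc_id for _, doc_id in sorted(scored, reverse=True)[:k]]
```

Embedding the markdown rendering, rather than raw database rows, lets free-text comments and structured fields share a single searchable representation.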

3. Evaluating CARL AI Gen

Having established a simple agentic application, we wanted to evaluate two aspects of our agent:

- Its ability to retrieve the correct document for a given question;

- Its ability to answer the question correctly based on the information in that document.

To do so, we set up an evaluation dataset using the various internal CARL Source databases and a small sample of client work orders. To this end, we took the 144 client work orders and:

- extracted the identified problem from the technician comments

- extracted the solution that was applied by the technician

- generated a question based on the extracted identified problem

- generated the ideal response from the extracted applied solution
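A hedged sketch of how each evaluation item could be assembled from a technician comment. It assumes a hypothetical `llm` callable (prompt in, text out) that any chat-completion client could be wrapped to fit, and the prompt wording is illustrative rather than the prompts actually used. Each item keeps the id of its source work order so that retrieval can later be scored against it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


@dataclass
class EvalItem:
    work_order_id: str
    problem: str
    solution: str
    question: str
    ideal_answer: str


def build_eval_item(wo_id: str, comment: str, llm: LLM) -> EvalItem:
    """Derive one question/answer pair from a technician comment."""
    problem = llm(f"Extract the problem identified in this comment:\n{comment}")
    solution = llm(f"Extract the solution the technician applied:\n{comment}")
    question = llm(f"Write a technician's question describing this problem:\n{problem}")
    ideal = llm(f"Write the ideal answer based on this solution:\n{solution}")
    return EvalItem(wo_id, problem, solution, question, ideal)


def build_eval_set(comments: Dict[str, str], llm: LLM) -> List[EvalItem]:
    """Build one evaluation item per work order comment."""
    return [build_eval_item(wo_id, text, llm) for wo_id, text in comments.items()]
```

Generating the question from the extracted problem alone, rather than from the full comment, reduces the chance that the question simply copies wording from the document it is meant to retrieve.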

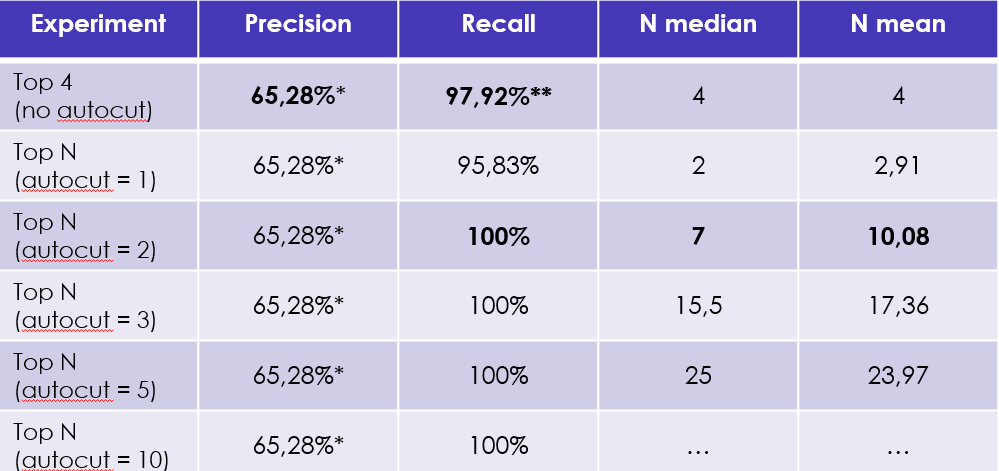

From there, we fed the 144 generated questions to the existing document retrieval engine and stored the top 4 documents it returned for each. We then calculated the precision and recall of the document retrieval engine using the following metrics:

- precision: the proportion of questions for which the engine returns, in first position, the markdown of the work order used to generate the question;

- recall: the proportion of questions for which that markdown appears among the top 4 documents returned.
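Because each question has exactly one source work order, both metrics reduce to hit rates at a cutoff and can be computed as below. The function and argument names are illustrative: `results` maps each question to its ranked document ids, and `expected` maps it to the id of the work order it was generated from.

```python
from typing import Dict, List


def hit_rate_at_k(results: Dict[str, List[str]], expected: Dict[str, str], k: int) -> float:
    """Share of questions whose source work order appears in the top-k results."""
    hits = sum(1 for q, docs in results.items() if expected[q] in docs[:k])
    return hits / len(results)


def precision_at_1(results: Dict[str, List[str]], expected: Dict[str, str]) -> float:
    return hit_rate_at_k(results, expected, 1)


def recall_at_4(results: Dict[str, List[str]], expected: Dict[str, str]) -> float:
    return hit_rate_at_k(results, expected, 4)
```

For example, with three questions where the source document is ranked first once, ranked second once, and missing once, precision is 1/3 and recall is 2/3.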

The results of this initial experiment show a precision of 65.28% and a recall of 97.92%: the source work order was ranked first for 94 of the 144 questions and appeared in the top 4 for 141 of them. Despite the limited sample size, this suggests that the document retrieval process is effective. The relevant document is almost always among the retrieved candidates, even when it is not ranked first.

In pursuit of a perfect recall rate, we looked for ways to dynamically adjust the number of retrieved documents based on their semantic relevance to the original query. To this end, we investigated the effect of the autocut parameter on recall. Setting the autocut value to 2 raised recall to 100%.
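Autocut-style truncation can be illustrated with a simplified heuristic: sort results by similarity, flag the positions where the score drops much more sharply than the average gap, and cut after the N-th such jump. This is an approximation written for illustration only. The vector store's own autocut algorithm may detect jumps differently, and the `jump_factor` parameter is a hypothetical knob.

```python
from typing import List, Tuple


def autocut(
    results: List[Tuple[str, float]], cut: int = 1, jump_factor: float = 2.0
) -> List[Tuple[str, float]]:
    """Keep results up to the `cut`-th sharp drop in similarity score.

    Simplified jump-based truncation, not a vector store's actual algorithm.
    `results` holds (doc_id, similarity) pairs; higher means more similar.
    """
    ranked = sorted(results, key=lambda r: r[1], reverse=True)
    if len(ranked) < 3:
        return ranked
    gaps = [ranked[i][1] - ranked[i + 1][1] for i in range(len(ranked) - 1)]
    mean_gap = sum(gaps) / len(gaps)
    jumps_seen = 0
    for i, gap in enumerate(gaps):
        # A "jump" is a drop much larger than the typical gap between neighbours.
        if mean_gap > 0 and gap > jump_factor * mean_gap:
            jumps_seen += 1
            if jumps_seen == cut:
                return ranked[: i + 1]
    return ranked  # fewer jumps than requested: keep everything
```

With scores 0.90, 0.88, 0.60, 0.58, 0.30, 0.29, `cut=1` keeps only the first two documents, while `cut=2` also admits the next cluster of four. This illustrates why a higher autocut value can raise recall at the cost of returning more documents.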

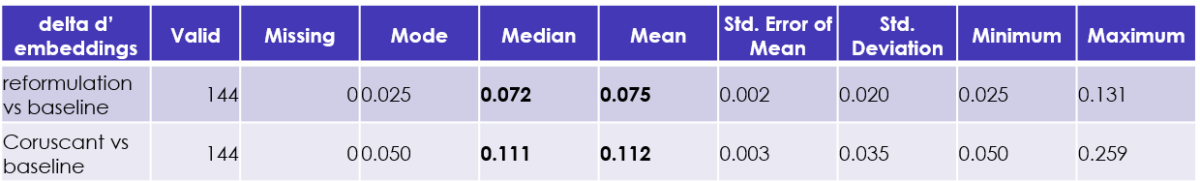

For the second aspect, we ran our agent on the set of 144 generated questions and collected its final answers. As a first evaluation of the quality of CARL AI Gen's responses, we compare their semantic content with that of the solution written by the technician. The rationale is that if the agent's answer is semantically similar to the technician's solution, the agent most likely relied on the right document to compose it.

We generated semantic embeddings for:

- The generated ideal response (designated as “reformulation”).

- The solution provided by the technician (designated as “baseline”).

- The RAG solution (top 4) from Coruscant (designated as “CARL AI Gen”).

We then calculated the cosine distance between:

- The reformulation and the baseline.

- CARL AI Gen and the baseline.

The statistical description is presented in Figure 8 (for reference, a lower value is better):

Looking at Figure 8, we observe similarly low distances to the baseline for both the reformulation and CARL AI Gen. As a reminder, the closer the cosine distance is to 0, the closer the two texts are semantically; the larger the distance, the further apart they are. These initial results therefore suggest that Coruscant Agent can generate answers that are semantically close to the solutions written by technicians.
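The comparison can be sketched as follows. Cosine distance is one minus the cosine similarity between two embedding vectors. It is computed per question, pairing each candidate text with the technician's solution for that question, and the resulting distances are then summarized. The toy vectors in the usage below are placeholders for real sentence embeddings, and the choice of summary statistics (mean, median, standard deviation) is an assumption about what the statistical description contains.

```python
import math
import statistics
from typing import Dict, List, Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cos(u, v): 0 for identical directions, larger for less similar texts."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm


def describe(distances: List[float]) -> Dict[str, float]:
    """Summary statistics over per-question distances."""
    return {
        "mean": statistics.mean(distances),
        "median": statistics.median(distances),
        "std": statistics.stdev(distances) if len(distances) > 1 else 0.0,
    }


def compare_to_baseline(
    candidates: List[Sequence[float]], baselines: List[Sequence[float]]
) -> Dict[str, float]:
    """Pair each candidate embedding with the baseline for the same question."""
    return describe([cosine_distance(c, b) for c, b in zip(candidates, baselines)])
```

Running `compare_to_baseline` once with the reformulation embeddings and once with the CARL AI Gen embeddings, against the same baseline list, yields the two distributions being compared.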

Conclusion and future directions

This initial proof-of-concept and its evaluation show a promising start to the development of the CARL AI Gen Agent. The results indicate that the agent can effectively retrieve relevant documents and generate responses semantically similar to those written by technicians. This capability could not only improve the efficiency of maintenance operations but also give technicians the information they need to perform their tasks more effectively.

Looking ahead, there are several exciting perspectives for the CARL AI Gen Agent:

- Task Decomposition: The agent can be further developed to break down complex maintenance tasks into simpler, manageable components, allowing technicians to tackle issues systematically.

- Symptom Extraction: Enhancing the agent’s ability to extract symptoms from technician comments and work orders can lead to more accurate diagnostics and quicker resolutions.

- From Simple to Complex Queries: The agent can evolve to handle increasingly complex queries, enabling technicians to ask nuanced questions and receive detailed, context-aware responses.

- Workflow Integration: Future iterations of the agent could integrate seamlessly into existing workflows, providing real-time assistance and recommendations based on the current context of the technician’s work.

By pursuing these directions, the CARL AI Gen Agent can significantly improve the maintenance management process, ultimately leading to enhanced operational efficiency and reduced downtime for machinery.