Large urban agglomerations are nowadays facing major issues such as economic restrictions, environmental challenges, and the need for global and systemic approaches to city management [8]. One of these issues is the precise monitoring of urban objects, which can be natural (trees) or artificial (traffic lights, poles), static or moving (cars). Such monitoring is essential to analyze how these objects interact (for example, tree branches growing close to an electric pole) and to prevent the risks associated with them (for example, dead parts of a tree that may fall onto a street). Being able to localize urban objects and provide information about their status is therefore essential.

There are too many objects for manual approaches. For example, Montpellier (France) has:

- 282,000 inhabitants (2015)

- more than 1,000 km of roads

- 2,450 streets, with 5,000 to 8,000 signs

- about 240 km of cables (60 km for the tramway)

- about 40,000 trees

Dynamic LiDAR acquisition from terrestrial mobile devices

LiDAR sensors, which are now commonly mounted on mobile platforms such as cars, can perform dynamic acquisition of an entire scene such as a city or an agglomeration [4]. Such an acquisition provides more 3D spatial context and more precise depth information than a video camera acquisition.

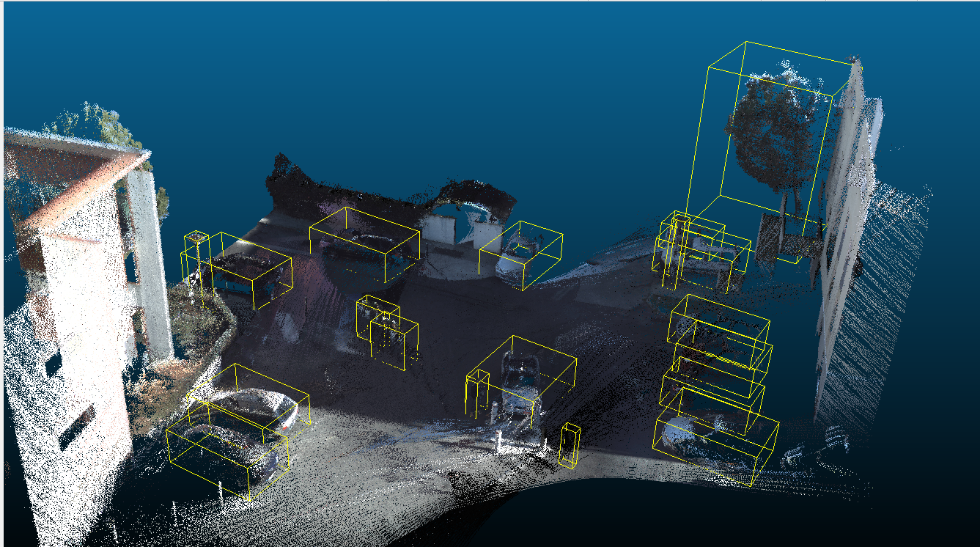

From a LiDAR point cloud, it would be possible to detect the objects in the 3D scene to help manage an agglomeration. For example, knowing precisely how many trees or poles there are, and where they are located, would greatly help in updating urban object databases, finding these objects and monitoring their status. Tracking objects that undergo constant change is especially valuable, and the most notable examples are living objects such as trees. Mobile LiDAR devices are equipped with GNSS (Global Navigation Satellite System) receivers that georeference the data during acquisition. Thus, any urban object extracted from the point cloud can be projected into an existing GIS (Geographic Information System).
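Because the points are already georeferenced, turning a detected object into a GIS entry can be as simple as summarizing its points. The sketch below is a hypothetical illustration, not the project's pipeline: it assumes an object has already been segmented as a list of `(x, y, z)` tuples in a projected coordinate system with metre units, and the names `GisRecord` and `object_to_gis_record` are invented for this example. It reduces the object to its 2D centroid, written as an OGC Well-Known Text point that common GIS tools can import, plus a few attributes.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class GisRecord:
    """Hypothetical attribute row for one detected urban object."""
    label: str        # class assigned to the object, e.g. "tree"
    wkt: str          # 2D position as Well-Known Text
    height_m: float   # vertical extent of the object's points
    n_points: int     # number of LiDAR points in the object


def object_to_gis_record(points, label):
    """Summarize a segmented object (list of georeferenced (x, y, z) tuples).

    Assumes coordinates are in a projected CRS with metre units, so the
    centroid and height can be computed directly without reprojection.
    """
    if not points:
        raise ValueError("an object needs at least one point")
    xs, ys, zs = zip(*points)
    cx, cy = mean(xs), mean(ys)  # 2D centroid used as the object's location
    return GisRecord(
        label=label,
        wkt=f"POINT ({cx:.2f} {cy:.2f})",
        height_m=max(zs) - min(zs),
        n_points=len(points),
    )


if __name__ == "__main__":
    # Made-up coordinates for a small tree-like cluster of three points.
    tree = [(770010.0, 6279000.0, 42.0),
            (770012.0, 6279002.0, 50.5),
            (770011.0, 6279001.0, 47.0)]
    print(object_to_gis_record(tree, "tree"))
```

A centroid is the simplest choice for point-like objects such as poles or trunks; extended objects such as cables would more naturally be exported as lines.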

Furthermore, by performing these acquisitions on a daily or weekly basis, for example with LiDAR sensors mounted on garbage trucks, city managers would get a clearer picture of how infrastructure and objects evolve over time. As a consequence, they would gain more insight into which situations require their attention, and where precisely on the map. To deliver on such promises, we have to tackle this project’s biggest technical challenge: automatically extracting information from the point clouds, in other words, 3D pattern recognition.

Pattern recognition applied to 3D LiDAR point clouds

Pattern recognition has entered an era of complete renewal with the development of deep learning [1], in the academic community as well as in industry, owing to the significant breakthroughs these algorithms have achieved in 2D image processing over the last five years. Currently, most deep learning research still comes from 2D imagery, driven by challenges such as ILSVRC [2]. This sudden boost in performance led to new robust, near real-time object detection algorithms such as Faster R-CNN [3]. However, generalizing them to 3D data is not a simple task. This is especially true for 3D point clouds, where the information is not structured as it is in meshes: a point cloud is an unordered, irregularly sampled set of points with no connectivity between them.
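One concrete consequence of this lack of structure is that the same object can be stored with its points in any order, whereas image pixels always sit on a fixed grid. The minimal pure-Python sketch below (an illustration of the difficulty, not the method developed in this project) contrasts naively flattening the coordinates, which gives order-dependent features, with a symmetric aggregation (a per-coordinate maximum), which gives the same result for any ordering. The function names are hypothetical; the max-pooling idea is the one popularized by PointNet, where the maximum is taken over learned per-point features rather than raw coordinates.

```python
def flatten_features(points):
    # Concatenate coordinates in storage order, as one would read pixels
    # from a fixed image grid: the result depends on the point order.
    return [c for p in points for c in p]


def max_pool_features(points):
    # Per-coordinate maximum over all points: a symmetric function,
    # so any permutation of the points yields the same vector.
    return [max(p[i] for p in points) for i in range(3)]


cloud = [(0.0, 1.0, 2.0), (3.0, 0.5, 1.0), (1.5, 2.5, 0.0)]
shuffled = list(reversed(cloud))  # same object, different storage order

print(flatten_features(cloud) == flatten_features(shuffled))    # False
print(max_pool_features(cloud) == max_pool_features(shuffled))  # True
```

Raw coordinate maxima alone discard most of the shape, which is why such architectures first transform each point independently and only then pool.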

This project is the subject of Younes Zegaoui’s thesis, which started in November 2017 and is expected to conclude in November 2020. Its goal is to develop new techniques for 3D shape classification and urban object localization in large LiDAR-acquired scenes.