Article co-authored by: Kilian Bauvent (Lead engineer), Benoit Verhaeghe (Project manager and Tech Lead), Marius Pingaud (Developer on BL.CodeRules and diagram designer), Nicolas Hlad (Blog post author and survey designer)

On the importance of Merge Requests

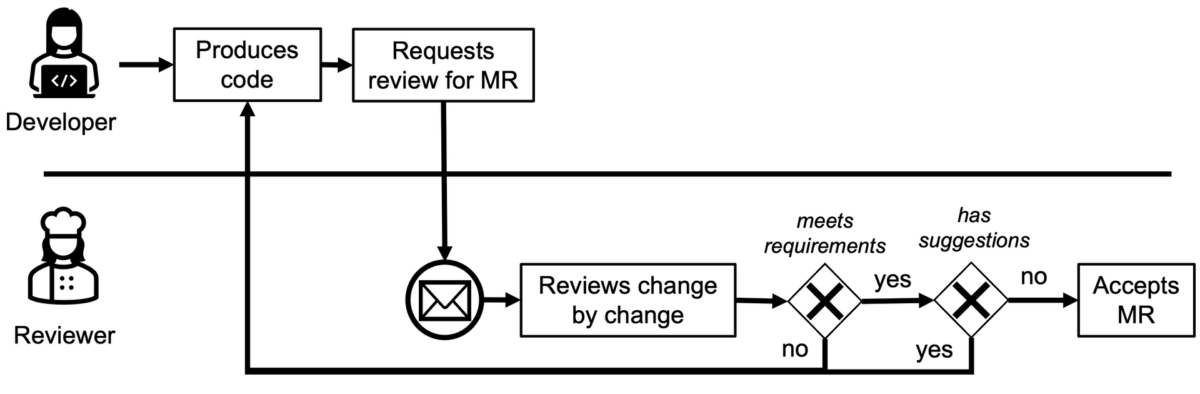

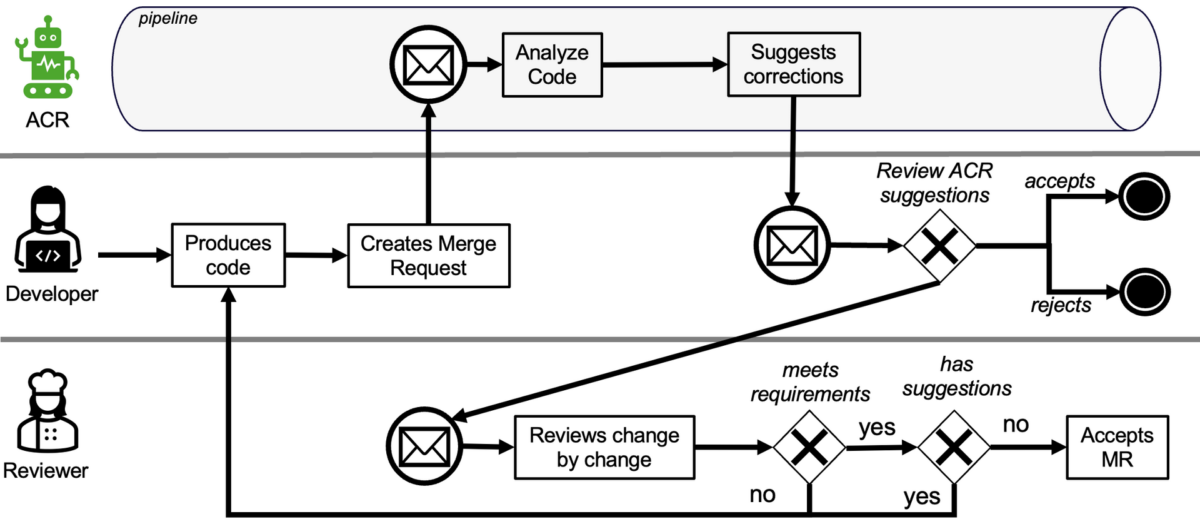

The overall merge request process at Berger-Levrault

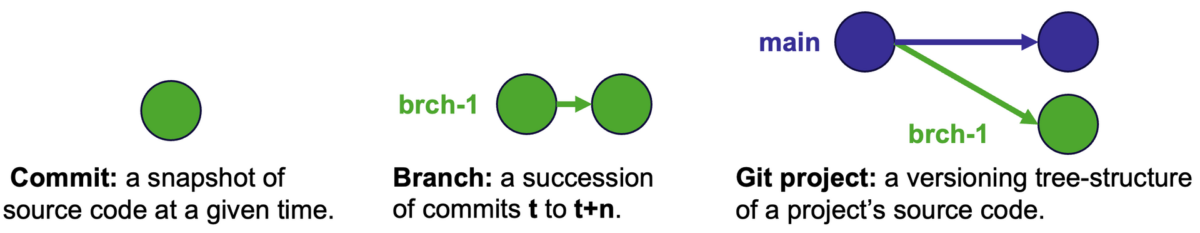

Let us start with the big picture. At Berger-Levrault, developers use Git to collaborate on the source code of their different projects. In Git, each code version pushed to a shared repository (e.g., on GitLab or GitHub) is captured in a commit. A commit is essentially a snapshot of the source code made by a developer.

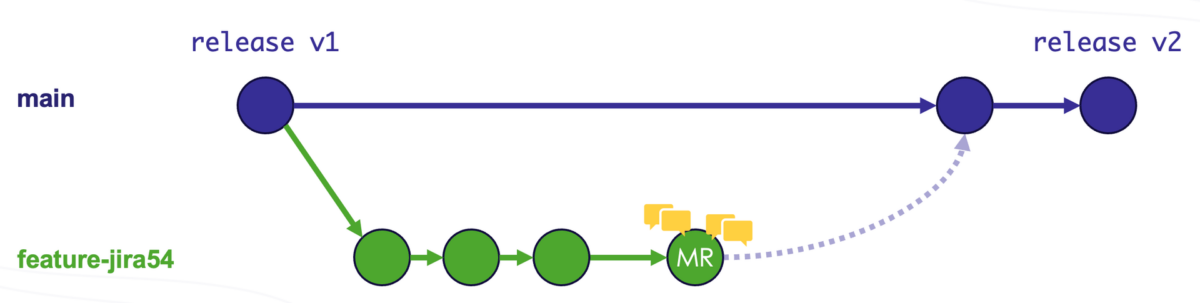

Each developer commits to a specific branch, often associated with a particular feature to develop. When the feature is ready, all the changes developed inside its branch must be integrated into the main branch of the project by a mechanism called a merge request.

The merge request is a virtual social space where the code written by a developer is reviewed by their colleagues, a.k.a. reviewers.

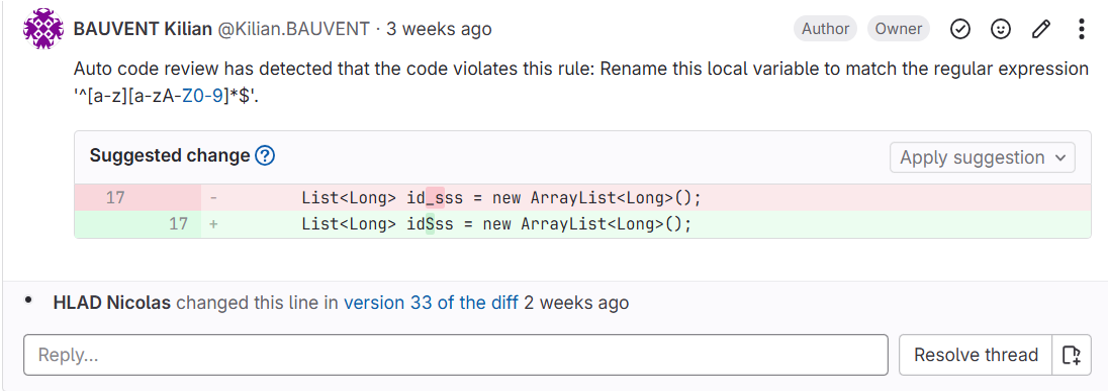

The reviewer ensures that 1) the code proposed by the developer meets the requirements of the feature and 2) the code itself meets a certain quality standard (e.g., established code conventions, no vulnerabilities, no code smells). For the latter, the reviewer can post comments about the submitted code and even propose changes through a suggestion.

However, reviewing a merge request takes time. As the previous diagram shows, there is a feedback loop between developers and reviewers: when a reviewer has suggestions, she must communicate them to the developer and wait for them to be implemented in a new commit. Once the requirements check has passed, most suggestions concern code quality.

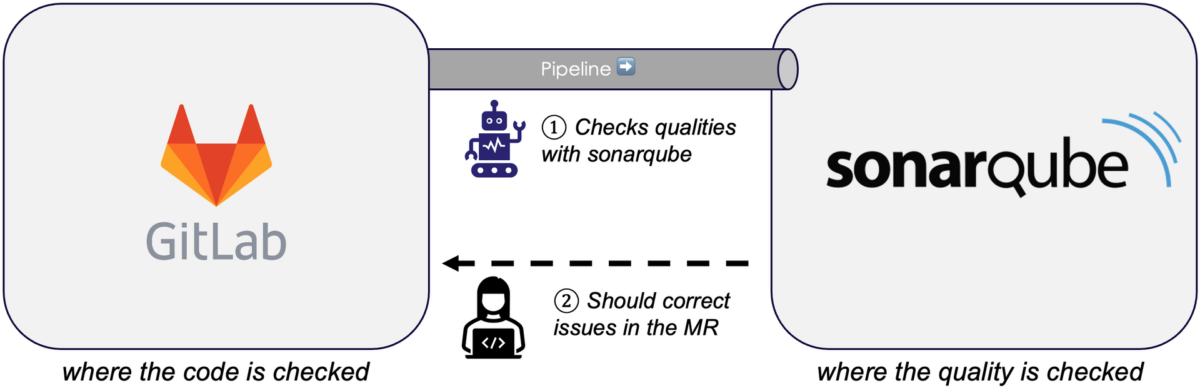

Fortunately, tools exist to automatically detect code smells and vulnerabilities. At Berger-Levrault, we use SonarQube for that purpose. When a merge request is created, GitLab automatically creates a Continuous Integration (CI) pipeline that sends the latest commits to SonarQube for analysis.

On the SonarQube platform, developers can see the detected issues along with other recommendations. Each issue comes with an estimated time to fix and its impact on the code base (from low to critical).

So, a developer works on developing a feature, then sends her code to be reviewed with a merge request. This triggers an automatic pipeline that sends the code to SonarQube. SonarQube gives feedback so that she and her reviewer can fix the code quality if needed. With that process in place, it’s hard to imagine that there are any code issues left unfixed in our project’s code. Right?

Why are so many issues left unfixed in our projects' code?

Here is the problem: we observed that, despite the review process and the automatic SonarQube analyses, little to no quality feedback is applied to our projects' source code.

Take the teams working on a major tool at Berger-Levrault, which we will call Anonyme. In 2024, SonarQube reported 43,000 issues in Anonyme, which we calculated to represent over 1,162 days of technical debt.

Another thing to keep in mind: in 2024, Anonyme registered over 2,239 merge requests.

By surveying the top 5 merge request reviewers of the Anonyme team in 2024, we found that they spend at least 1 hour per merge request, that each merge request involves at least 2 people, and that they review an average of 43 merge requests per week. Combining those numbers, we calculate a workload of over 75.25 hours per week, or around 3,913 hours per year, dedicated to reviewing merge requests. That is the workload of at least 2.5 full-time employees per year!
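As a quick sanity check, the yearly figure can be recomputed from the weekly one. This is a minimal sketch that assumes 52 working weeks per year, which the survey does not state; the variable names are ours.

```python
# Figures quoted in the survey paragraph above.
weekly_review_hours = 75.25
# Assumption (not stated in the survey): 52 working weeks per year.
weeks_per_year = 52

yearly_review_hours = weekly_review_hours * weeks_per_year

# The article equates this workload with 2.5 full-time employees,
# which implies the following number of yearly hours per employee.
full_time_employees = 2.5
implied_hours_per_employee = yearly_review_hours / full_time_employees

print(f"{yearly_review_hours:.0f} review hours per year")
print(f"{implied_hours_per_employee:.0f} hours per employee per year")
```

Under that assumption the yearly total comes out at 3,913 hours, and the 2.5 full-time-employee figure corresponds to roughly 1,565 working hours per employee per year.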

Our teams currently invest significant time in managing merge requests; however, they struggle to incorporate code-quality feedback from SonarQube effectively. Given the current workforce constraints, we believe that only an automated tool, seamlessly integrated into their existing environment, can address these challenges.

At the DRIT, we developed AutoCodeReview, a tool that automatically integrates the feedback from SonarQube and more as suggestions to the merge request reviews. Let us see how it works!

AutoCodeReview: suggesting code quality fixes during merge requests

Let us introduce AutoCodeReview and describe its integration into our current reviewing workflow.

AutoCodeReview is a bot, a program written in Pharo Smalltalk that runs in the CI/CD pipeline of any project. Whenever a merge request is created, it triggers an event that sets up the AutoCodeReview pipeline.

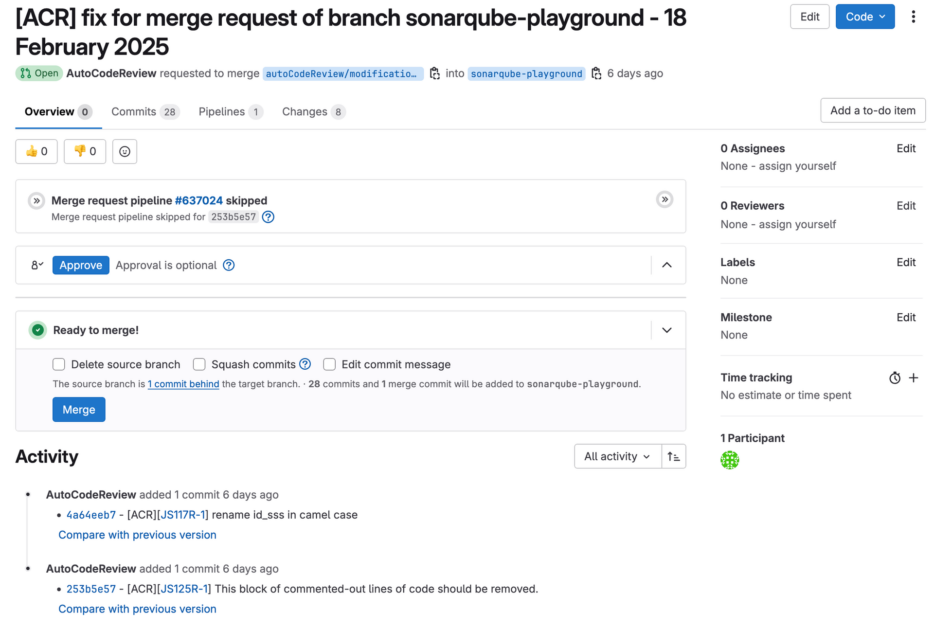

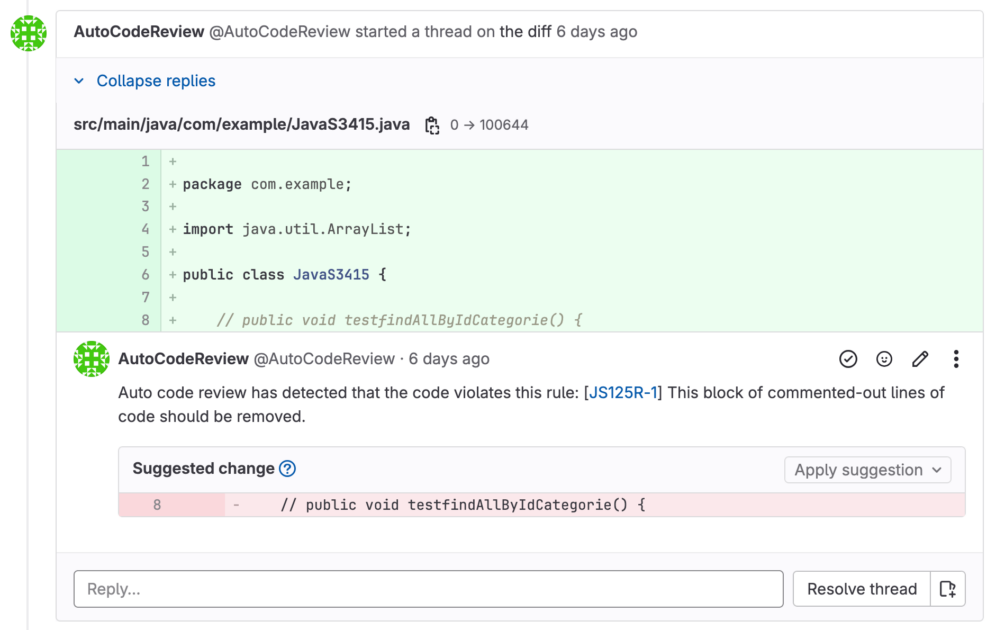

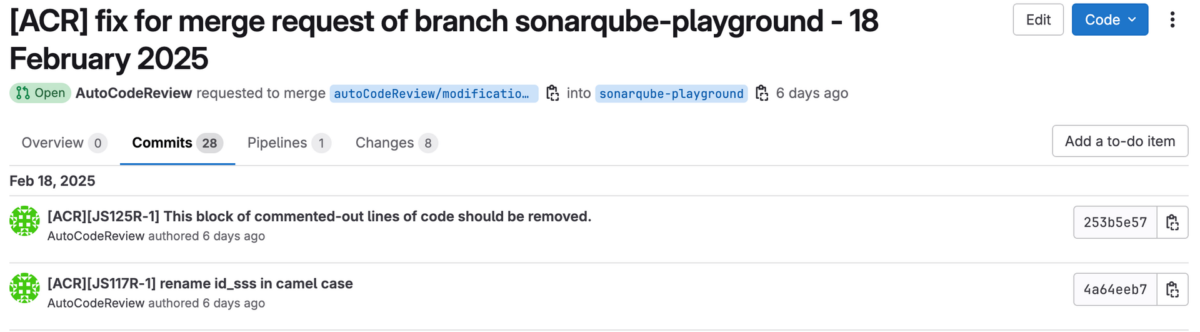

Its pipeline can also be launched manually on a project branch; in this case, AutoCodeReview creates its own merge request. In either case, the result of AutoCodeReview is always a set of fixes suggested in a merge request.

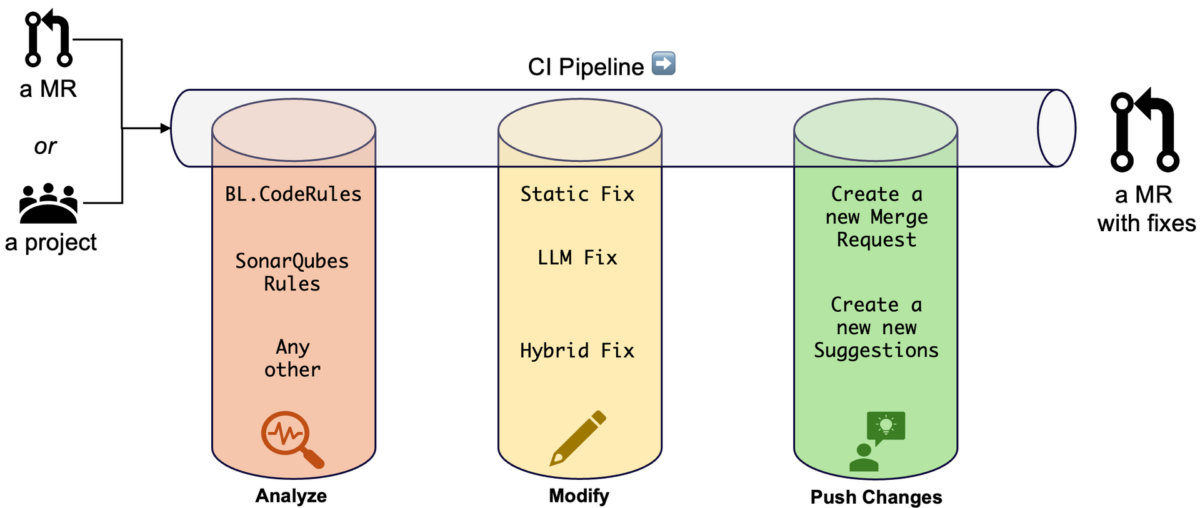

An AutoCodeReview pipeline essentially has three jobs: analyze, modify, and push changes.

Analyze

The first step analyzes the source code to detect any violations of a specific set of rules. The analysis parses the source code and looks for patterns declared as rules. Each detection creates a violation, which in turn leads to a fix.
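To make the rule-to-violation idea concrete, here is a minimal Python sketch (AutoCodeReview itself is written in Pharo Smalltalk). The `Violation` class, the `analyze` function, and the class-name rule are hypothetical names chosen for illustration, and a line-based regex stands in for real source code parsing.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class Violation:
    rule_name: str
    line_number: int
    text: str


# Hypothetical rule: Java class names must be written in PascalCase.
CLASS_DECLARATION = re.compile(r"\bclass\s+([A-Za-z_]\w*)")
PASCAL_CASE = re.compile(r"^[A-Z][A-Za-z0-9]*$")


def pascal_case_class_rule(source: str) -> Iterator[Violation]:
    """Yield one violation per class whose name is not PascalCase."""
    for line_number, line in enumerate(source.splitlines(), start=1):
        for match in CLASS_DECLARATION.finditer(line):
            name = match.group(1)
            if not PASCAL_CASE.match(name):
                yield Violation("PascalCaseClassName", line_number, name)


Rule = Callable[[str], Iterable[Violation]]


def analyze(source: str, rules: List[Rule]) -> List[Violation]:
    """Run every rule over the source and collect the violations."""
    violations: List[Violation] = []
    for rule in rules:
        violations.extend(rule(source))
    return violations


java_source = "public class invoice_service {\n}\nclass Customer {\n}\n"
for violation in analyze(java_source, [pascal_case_class_rule]):
    print(violation)
```

Here only `invoice_service` on line 1 is reported. Keeping each rule as a plain function makes the rule set easy to extend, which mirrors the extensibility goal described in this section.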

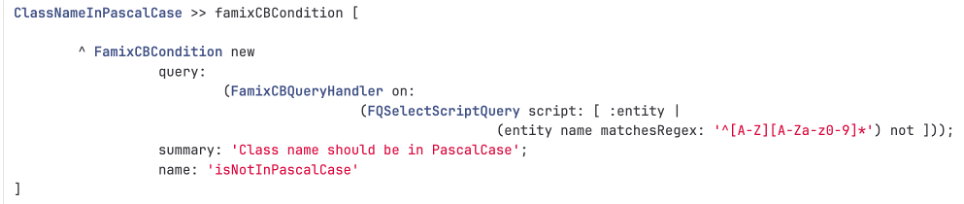

We draw rules from two sources: a custom set of rules for Berger-Levrault, called BL.CodeRules, and the rules from SonarQube. The rule system can be extended to other sources in the future.

First, the BL.CodeRules set is written in Pharo Smalltalk. The next picture shows an example with the Pascal Case rule.

Second, we use rules from SonarQube. Through the SonarQube API, we gather all the issues as violations. However, SonarQube doesn't provide fixes for the violations, only a text describing each issue and a recommendation on how to fix it. So once again, we use Pharo to implement a fix for each SonarQube rule.
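The mapping from SonarQube issues to violations could look like the following Python sketch. It assumes the JSON shape returned by SonarQube's `api/issues/search` Web API endpoint (an `issues` array whose entries carry `rule`, `component`, `line`, and `message`). The payload below is hand-written sample data, and `SonarViolation` and `violations_from_sonar` are hypothetical names, not part of AutoCodeReview.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SonarViolation:
    rule_key: str
    file_path: str
    line_number: Optional[int]
    message: str


def violations_from_sonar(payload: dict) -> List[SonarViolation]:
    """Turn a parsed issues-search response into violation objects."""
    violations = []
    for issue in payload.get("issues", []):
        # Component keys look like "<project>:<path>"; keep only the path.
        file_path = issue["component"].split(":", 1)[-1]
        violations.append(SonarViolation(
            rule_key=issue["rule"],
            file_path=file_path,
            line_number=issue.get("line"),  # file-level issues have no line
            message=issue["message"],
        ))
    return violations


# Hand-written sample, not real project data.
sample_response = {
    "issues": [
        {
            "key": "sample-issue-1",
            "rule": "java:S1481",
            "severity": "MINOR",
            "component": "anonyme:src/main/java/Invoice.java",
            "line": 12,
            "message": "Remove this unused local variable.",
            "effort": "5min",
        }
    ]
}

for violation in violations_from_sonar(sample_response):
    print(violation)
```

Each violation keeps the rule key, so a fix can later be looked up per SonarQube rule.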

Modify

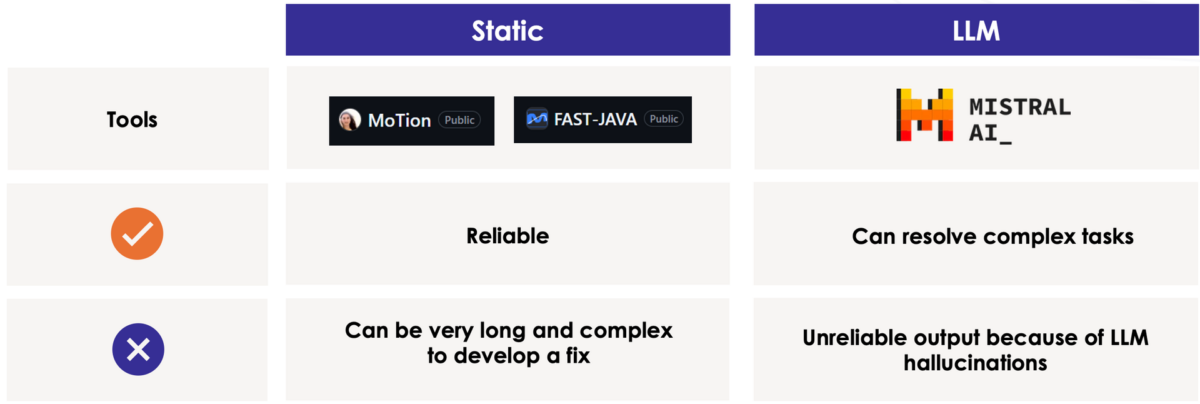

The modification step applies the fix defined for each violation and returns the modified source code. Each modification is a proposed change rather than an actual change to the original code. The modifier can apply different types of fixes, depending on the rule. Currently, there are three: the static fix, the LLM fix, and the hybrid fix.

The static fix proposes a solution based on a predetermined code update. For instance, if a variable name violates a Camel Case rule, we can fix it with a regex that removes the offending symbol and capitalizes the next letter.

The LLM fix lets a generative AI model (a large language model like Mistral 7B) provide a solution. We prompt the LLM with the targeted code snippet, a text explaining the violation, and the instruction to solve it. For instance, we designed a rule that makes a comment mandatory for each Java method. In that case, the LLM is instructed to generate a Java comment based on the method's body.
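A static fix of this kind can be sketched in a few lines of Python. The function name `to_camel_case` is ours, and we assume the offending symbol is an underscore, as in a snake_case variable name.

```python
import re


def to_camel_case(identifier: str) -> str:
    """Remove each underscore and capitalize the character that follows it."""
    return re.sub(
        r"_+([A-Za-z0-9])",
        lambda match: match.group(1).upper(),
        identifier,
    )


print(to_camel_case("total_amount_due"))
```

This rewrites `total_amount_due` as `totalAmountDue`. The sketch only handles the name itself; a usable fix would also have to rename every reference to the variable.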
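The three parts of the prompt can be assembled as in the Python sketch below. The wording of the prompt and the name `build_fix_prompt` are illustrative assumptions; the actual prompts used by AutoCodeReview are not shown in this post, and the call to the model is deliberately left out.

```python
def build_fix_prompt(code_snippet: str, violation_text: str, instruction: str) -> str:
    """Combine the snippet, the violation, and the instruction into one prompt."""
    return "\n\n".join([
        "You are reviewing Java code.",
        "Violation:\n" + violation_text,
        "Code:\n" + code_snippet,
        "Instruction:\n" + instruction,
    ])


java_method = (
    "public int add(int a, int b) {\n"
    "    return a + b;\n"
    "}"
)

prompt = build_fix_prompt(
    code_snippet=java_method,
    violation_text="Every Java method must have a comment.",
    instruction="Write a comment describing this method. Return only the comment.",
)
print(prompt)
```

The resulting string is what would be sent to the model; the generated comment would then be inserted above the method as the proposed modification.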

Finally, if needed, more complex rules could be solved using a hybrid solution that uses static and LLM fixes. But we have yet to define those.

Push changes

Once the modification is ready, it is pushed back to the Git platform to appear in a merge request.

First, if AutoCodeReview is triggered manually on a branch, the modifications are pushed all at once inside a new merge request. This way, the reviewers can review those changes before accepting or rejecting them.

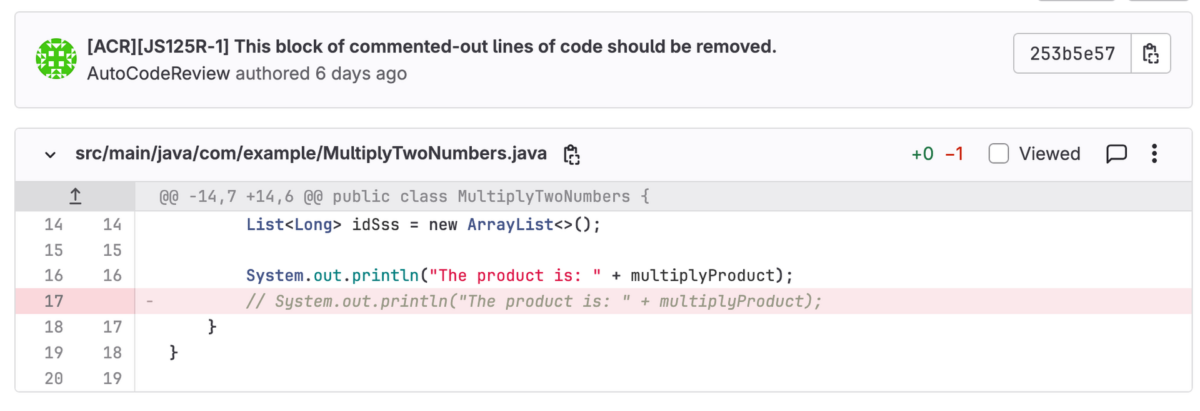

Second, if AutoCodeReview has been triggered by a new merge request, its modifications appear as suggestions. A suggestion only proposes code changes to the developer, who can then accept or reject it, and even discuss those changes with the reviewer.

When a suggestion is accepted, it produces a new follow-up commit that is added to the current merge request.
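On GitLab, a suggestion is written as a special fenced block inside a merge request comment. The Python sketch below formats such a comment body, assuming GitLab's `suggestion:-N+M` syntax, where N and M are the numbers of lines above and below the commented line to replace. The function name is hypothetical, and posting the comment on the right line of the diff through the GitLab API is out of scope here.

```python
# Built programmatically so this example does not contain a literal fence.
FENCE = "`" * 3


def suggestion_comment(fixed_code: str, lines_above: int = 0, lines_below: int = 0) -> str:
    """Format a comment body that GitLab renders as an applicable suggestion."""
    header = f"{FENCE}suggestion:-{lines_above}+{lines_below}"
    return "\n".join([header, fixed_code, FENCE])


print(suggestion_comment("int totalAmountDue = 0;"))
```

With the default offsets, the suggestion replaces only the line the comment is attached to.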

Conclusion

AutoCodeReview is a brand new tool, developed over the last 6 months at Berger-Levrault. It creates a pipeline that runs in parallel with the review process to enforce the correction of code quality issues. Those issues are detected using rules from BL.CodeRules, our custom set, or directly from SonarQube. Each issue is fixed with a static or an LLM-based approach.

When we applied AutoCodeReview to the pipelines of our Anonyme teams, we managed to solve 6,138 issues in under 20 minutes (versus 120 hours manually).

We anticipate that AutoCodeReview will be deployed to minimize the occurrence of code smells within the codebase. Our next step is to investigate how AutoCodeReview impacts the effort of reviewing merge requests by measuring how many suggestions are accepted, modified, generated, etc.

For future work, we’ve observed that reviewers and developers tend to review large merge requests inside their IDE tool, thus losing the suggestions displayed inside their Git platform. We are already working on ReviewFixer, a VSCode plugin that displays the suggestions and comments of a merge request directly inside the IDE. But more on that in a future blog post!