What is Augmented Reality, and how is it used for Augmented Maintenance?

Augmented Reality (AR) has emerged as a powerful tool for enhancing human-computer interaction by seamlessly integrating digital content into the physical world. Unlike traditional desktop or mobile interfaces, AR enables spatially situated interaction: virtual content is embedded in the user’s physical surroundings, so users can perceive, place, and manipulate virtual objects in relation to the real world, as if they were part of their physical environment.

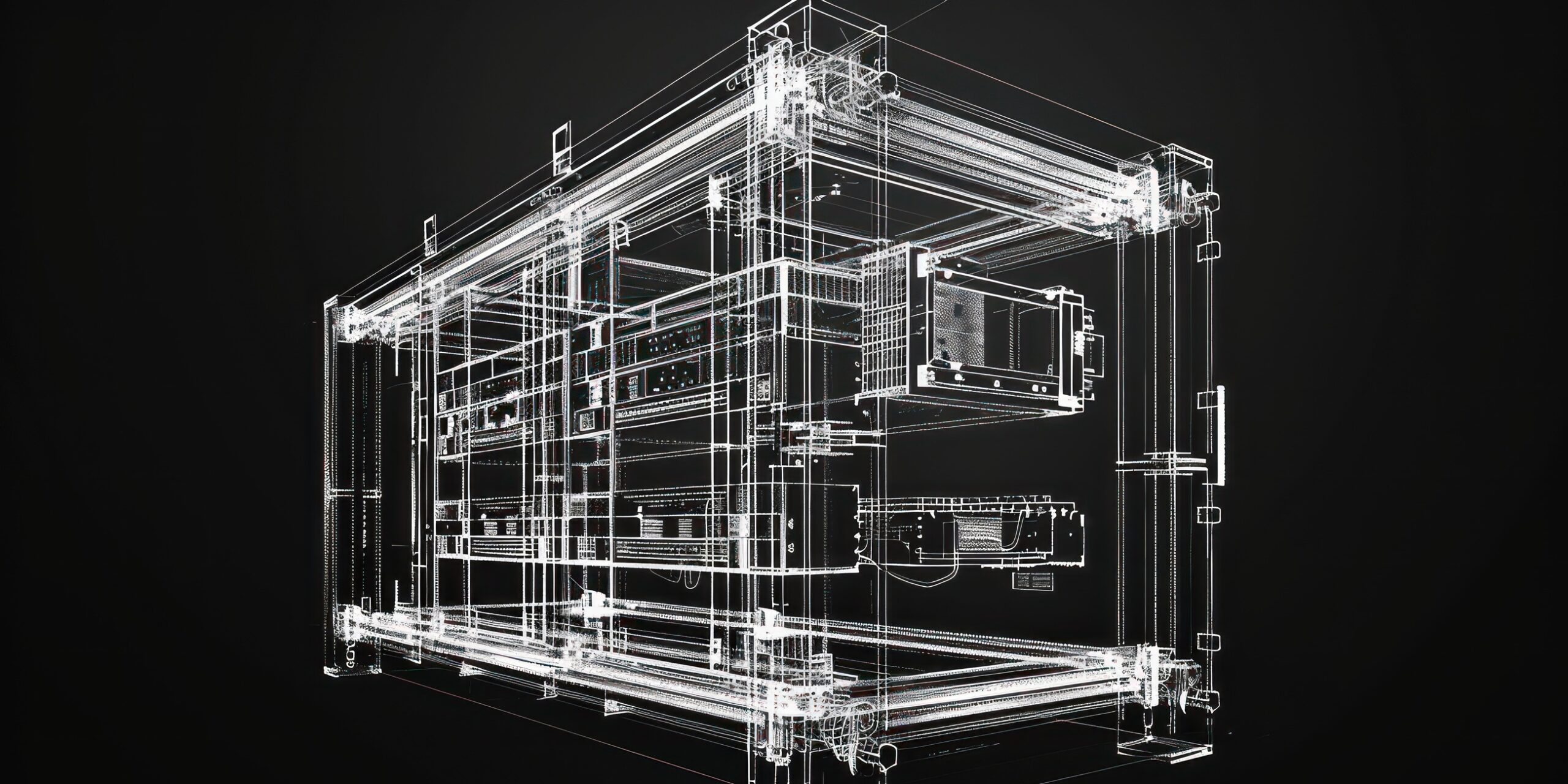

For example, in an industrial context, as illustrated in the picture above with the BL.MIXEDR system, AR instructions can be placed next to machines, giving technicians access to useful information right where they need it during maintenance work.

How do users interact in Augmented Reality?

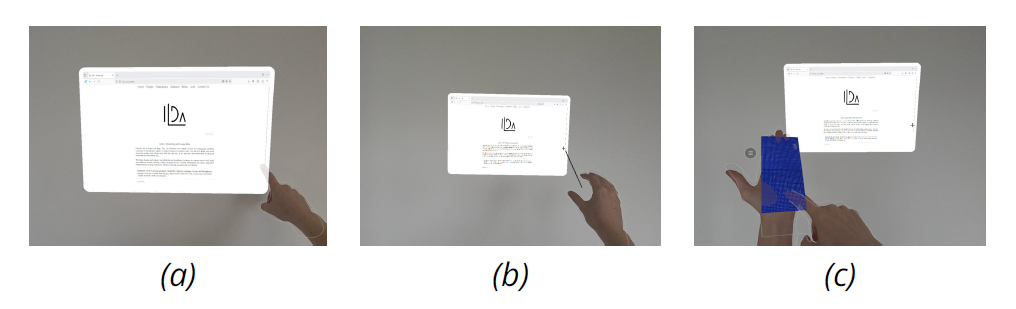

Users have several ways to interact in an Augmented Reality environment. Most commonly, they interact directly with a virtual window, either (a) by touching it when it is within arm’s reach or (b) by pointing at it with a raycast, a virtual ray projected from the hand. Mid-air gestures and raycast-based input often require users to keep their arms raised at eye level, which causes fatigue and raises concerns about social acceptance.
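To make the raycast technique (b) more concrete, here is a minimal Python sketch of how a ray from the user’s hand might be tested against a flat virtual window. It assumes the window is a rectangle described by a centre, a unit normal, and two unit axes in metres; the `VirtualWindow` class and `raycast_window` function are hypothetical names for illustration, not part of BL.MIXEDR or of any AR SDK.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class VirtualWindow:
    """Hypothetical flat AR window: a rectangle floating in 3D space (metres)."""
    center: Vec3
    normal: Vec3   # unit vector perpendicular to the window
    right: Vec3    # unit vector along the window's width
    up: Vec3       # unit vector along the window's height
    width: float
    height: float


def raycast_window(origin: Vec3, direction: Vec3,
                   win: VirtualWindow) -> Optional[Tuple[float, float]]:
    """Return the hit point in window-local (u, v) coordinates, or None on a miss."""
    denom = dot(direction, win.normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the window plane
    t = dot(sub(win.center, origin), win.normal) / denom
    if t < 0:
        return None  # the window is behind the ray origin (the hand)
    hit = (origin[0] + t * direction[0],
           origin[1] + t * direction[1],
           origin[2] + t * direction[2])
    offset = sub(hit, win.center)
    u, v = dot(offset, win.right), dot(offset, win.up)
    if abs(u) <= win.width / 2 and abs(v) <= win.height / 2:
        return (u, v)
    return None  # the ray hits the plane but outside the window's borders


# Usage: a 60 x 40 cm window 2 m in front of a hand held at 1.5 m height.
win = VirtualWindow(center=(0.0, 1.5, 2.0), normal=(0.0, 0.0, -1.0),
                    right=(1.0, 0.0, 0.0), up=(0.0, 1.0, 0.0),
                    width=0.6, height=0.4)
print(raycast_window((0.0, 1.5, 0.0), (0.1, 0.0, 1.0), win))  # (0.2, 0.0)
print(raycast_window((0.0, 1.5, 0.0), (1.0, 0.0, 1.0), win))  # None
```

A ray tilted slightly to the right lands 20 cm right of the window centre, while a ray that passes beside the window returns `None`. The user has to keep pointing the hand at the window for the whole interaction, which is where the arm fatigue mentioned above comes from.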

Indirect interaction via touchpads offers an interesting alternative: users control a cursor displayed in AR by moving a finger on a pad located in an easy-to-reach zone (c), which reduces fatigue. Placing such pads on top of the non-dominant hand keeps them always available and provides haptic feedback during interaction, without requiring users to hold a device.
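The cursor control described above can be sketched as a relative, trackpad-style mapping: each movement of the finger on the pad moves the AR cursor by the same displacement multiplied by a gain. This is an assumption for illustration only; the article does not say whether the pads use relative or absolute mapping, and the `PadCursor` class and its default gain of 3.0 are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PadCursor:
    """Hypothetical relative (trackpad-style) mapping from a pad to an AR cursor."""
    window_width: float    # size of the target window, metres
    window_height: float
    gain: float = 3.0      # assumed control-display gain: cursor metres per finger metre
    x: float = 0.0         # cursor position in window-local metres, (0, 0) = centre
    y: float = 0.0
    _last: Optional[Tuple[float, float]] = None  # previous finger contact on the pad

    def touch(self, px: float, py: float) -> Tuple[float, float]:
        """Feed a finger contact in pad-local metres; move the cursor by the scaled delta."""
        if self._last is not None:
            dx, dy = px - self._last[0], py - self._last[1]
            half_w, half_h = self.window_width / 2, self.window_height / 2
            # Clamp so the cursor never leaves the virtual window.
            self.x = min(max(self.x + self.gain * dx, -half_w), half_w)
            self.y = min(max(self.y + self.gain * dy, -half_h), half_h)
        self._last = (px, py)
        return (self.x, self.y)

    def release(self) -> None:
        """Finger lifted: the next contact starts a new stroke without moving the cursor."""
        self._last = None


# Usage: the first contact only anchors the stroke; sliding then moves the cursor.
cursor = PadCursor(window_width=0.6, window_height=0.4)
cursor.touch(0.00, 0.00)   # finger lands on the pad
cursor.touch(0.02, 0.01)   # finger slides 2 cm right and 1 cm up
cursor.release()
```

Because lifting the finger and touching down elsewhere does not make the cursor jump, a small pad on the hand could still address a larger window by repeated strokes. An absolute mapping, where each point of the pad corresponds to a fixed point of the window, would be the main alternative design.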

Our approach: Interaction with the non-dominant hand as support

In field maintenance and industry, technicians wearing AR headsets could use their own hands to scroll through manuals, adjust parameters, or check to-do lists, instead of having to take out and pocket a smartphone or tablet, so their hands remain free for tools.

Conclusion

We conducted user experiments to evaluate the feasibility and performance of using the non-dominant hand as support for interaction in AR. Our results show that pads placed on the hand perform on par with pads placed in mid-air close to the hand, a design already widely adopted in AR user interfaces. We also quantify the trade-off of relying solely on hand tracking compared with high-fidelity tracking, such as that provided by a smartphone. High-fidelity tracking still offers a performance advantage in terms of speed and accuracy. However, this gap is relatively small, which indicates that on-hand pads can already be implemented today with hand tracking alone, particularly for discrete tasks such as button activations. In addition, when the smartphone is not already held in the hand, even simple interactions like button presses take longer to initiate, because users first need to grab the device.
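The point that hand tracking alone suffices for discrete tasks such as button activations can be illustrated with a small sketch of an on-hand button driven only by tracked joints. It assumes that the hand-tracking runtime provides, every frame, the dominant index fingertip position and a pad frame (origin, normal, and two axes) attached to the back of the non-dominant hand. `OnHandButton` and its 8 mm / 15 mm contact thresholds are hypothetical choices for illustration, not values from the study.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class OnHandButton:
    """Hypothetical square button drawn on the back of the non-dominant hand.

    The pad frame (origin, normal, right, up) is assumed to be rebuilt every
    frame from the hand-tracking joints of the non-dominant hand.
    """
    center_uv: Tuple[float, float]  # button centre in pad-local metres
    half_size: float                # half the side length of the button, metres
    press_mm: float = 8.0           # assumed: closer than this counts as contact
    release_mm: float = 15.0        # assumed: must move farther than this to release
    pressed: bool = False

    def update(self, fingertip: Vec3, origin: Vec3, normal: Vec3,
               right: Vec3, up: Vec3) -> bool:
        """Return True only on the frame where the button becomes pressed."""
        offset = sub(fingertip, origin)
        height_mm = dot(offset, normal) * 1000.0    # signed height above the hand
        u, v = dot(offset, right), dot(offset, up)  # position on the pad plane
        inside = (abs(u - self.center_uv[0]) <= self.half_size
                  and abs(v - self.center_uv[1]) <= self.half_size)
        if not self.pressed and inside and height_mm < self.press_mm:
            self.pressed = True
            return True
        if self.pressed and height_mm > self.release_mm:
            self.pressed = False
        return False


# Usage: three tracking frames of a fingertip approaching and tapping the button.
button = OnHandButton(center_uv=(0.0, 0.0), half_size=0.01)
pad_frame = ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for tip in [(0.0, 0.0, 0.05), (0.002, 0.0, 0.005), (0.002, 0.0, 0.01)]:
    if button.update(tip, *pad_frame):
        print("button activated")  # printed once, on the second frame
```

Using two thresholds (hysteresis) is one common way to keep tracking jitter from triggering repeated activations while the fingertip hovers near the skin; more accurate tracking could allow tighter thresholds.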

Virtual pads placed on top of users’ hands are a promising input method for AR. They let users interact in a comfortable posture and combine the haptic benefits of a physical surface with the proprioceptive advantage of knowing where one’s own hand is. Because no device has to be held, users can also seamlessly combine touchpad interactions with the manipulation of other virtual and physical objects.

For more details, please check the full paper: https://dl.acm.org/doi/pdf/10.1145/3743707