I have been a member of the Berger-Levrault research team for 6 years, and one of our main challenges is integrating our innovations into the day-to-day work of the company. There are several ways to deliver an innovation: as an external code library, by providing diagrams, by teaching people how to use new tools, and so on. In this blog post, I will present how we integrate Software Engineering work into developers' everyday tools.

Software Engineering is about helping people develop great software systems. In Software Engineering Research, we build tools that help migrate your application or improve your software architecture. However, these kinds of innovations are not meant to be used every day. For instance, once an application is migrated, people do not run the migration tool on that application again.

Other innovations are about designing new visualizations or providing diagnostics about the code. These are most useful when they are updated automatically as the code changes: people using a map expect it to be up to date, and the same applies to software diagrams and code critics. Another constraint is that these innovations must be as easy to use, and as well integrated with developers' current tools, as possible.

At Berger-Levrault, we use Moose to analyze software systems. Moose allows us to create visualizations and diagnostics about the code. Today, we will present how to produce a basic UML visualization of a code base and integrate it into the GitLab wiki. Then, we present how to integrate Moose advanced diagnostics into the well-known SonarQube tool. Finally, we show the integration in text editors and IDEs such as VSCode.

Perform analysis in a CI

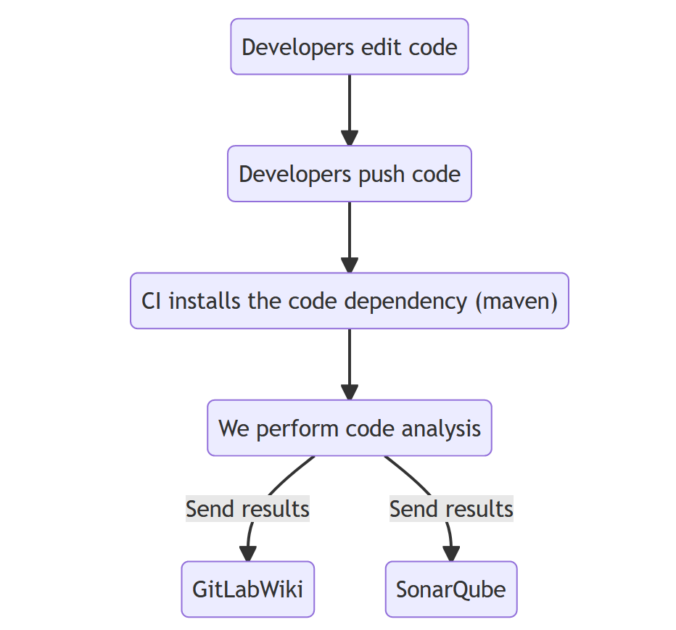

The first step toward running an analysis while developers are working on the code is to run it in the CI.

The main process is the following: developers edit code, push the code to a git repository, the CI installs the code dependencies, our tool analyzes the code, and new results are sent to developers’ tools.

With GitLab CI, two preliminary steps are required before the analysis. The listing below presents the GitLab CI file `.gitlab-ci.yml`.

```yaml
stages:
  - install
  - parse

# Install the dependencies
install:
  stage: install
  image:
    name: kaiwinter/docker-java8-maven
  script:
    # '../maven/settings - critics.xml' sets the maven repository to './repo'
    - mvn clean install --settings '../maven/settings - critics.xml' -Dmaven.test.skip=true
  artifacts:
    paths:
      - repo

parse:
  stage: parse
  image:
    name: ghcr.io/evref-bl/verveinej:v3.0.13
    entrypoint: [""]
  needs:
    - job: install
      artifacts: true
  script:
    - /VerveineJ-3.0.13/verveinej.sh -format json -o output.json -alllocals -anchor assoc -autocp ./repo .
  artifacts:
    paths:
      - output.json
```

The install step uses Maven (in the case of Java code) to install the project dependencies. This step is optional but helps get better results in the later analysis.

The step parse uses VerveineJ, a tool that parses Java code and produces a model of the code that can be used for analysis.
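To give a feel for what the parse job hands over to the next stage, here is a minimal Python sketch that counts the entities of a model by type. It assumes, without this post confirming it, that `output.json` is a JSON array of objects whose `FM3` key holds the entity type. The sample data, the type names, and the `count_entities_by_type` helper are hypothetical.

```python
import json
from collections import Counter

# Hypothetical excerpt of a model file. The "FM3" key and the type names are
# assumptions about the export format, not something shown in this post.
SAMPLE_MODEL = """
[
  {"FM3": "Famix-Java-Entities.Class", "id": 1, "name": "Invoice"},
  {"FM3": "Famix-Java-Entities.Attribute", "id": 2, "name": "_total"},
  {"FM3": "Famix-Java-Entities.Attribute", "id": 3, "name": "customer"}
]
"""


def count_entities_by_type(model_text):
    """Return a Counter mapping each entity type to its number of occurrences."""
    entities = json.loads(model_text)
    return Counter(entity.get("FM3", "<unknown>") for entity in entities)


if __name__ == "__main__":
    for entity_type, count in sorted(count_entities_by_type(SAMPLE_MODEL).items()):
        print(f"{entity_type}: {count}")
```

A quick check like this can be useful in the CI log to confirm that the parser actually produced entities before the heavier analysis starts.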

Once these preliminary steps are done, we ask the CI to run the analysis and send the results to the target platform.

Integrate visualizations to GitLab wiki

To integrate diagrams into the GitLab wiki, there are two options: use native Markdown diagrams, or export the visualization as a PNG and embed the PNG file in the Markdown page.
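To make the first option concrete: GitLab renders a fenced block tagged `mermaid` as a diagram. The Python sketch below wraps a Mermaid class diagram in such a block, which is the shape a generated wiki page needs. The sample diagram and the `to_wiki_page` helper are hypothetical and only illustrate the page format.

```python
# Built at runtime so that this example can itself sit inside a fenced block.
FENCE = "`" * 3

# Hypothetical class diagram written by hand for the illustration.
SAMPLE_DIAGRAM = "\n".join([
    "classDiagram",
    "    class Invoice",
    "    class Customer",
    "    Invoice --> Customer",
])


def to_wiki_page(mermaid_source):
    """Wrap Mermaid source in a fenced `mermaid` block, ready for a wiki page."""
    return f"{FENCE}mermaid\n{mermaid_source.strip()}\n{FENCE}\n"


if __name__ == "__main__":
    print(to_wiki_page(SAMPLE_DIAGRAM))
```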

In this blog post, we focus on native markdown schema using MermaidJS. To use Mermaid with Moose, we use the MermaidPharo project. The configuration of the CI to integrate visualization into the GitLab wiki is done in three steps:

- We add a configuration file named `.smalltalk.ston`. This file configures the CI to install the project MermaidPharo.

```ston
SmalltalkCISpec {
  #loading : [
    SCIMetacelloLoadSpec {
      #baseline : 'MermaidPharo',
      #repository : 'github://badetitou/MermaidPharo:main',
      #directory : 'src',
      #platforms : [ #pharo ],
      #onConflict : #useIncoming,
      #load : [ 'moose' ],
      #onUpgrade : #useIncoming
    }
  ],
  #testing : {
    #failOnZeroTests : false
  }
}
```

- Add a Pharo script in `ci/executeCode.st` that will perform the analysis.

```smalltalk
"=== Load MooseModel ==="
model := FamixJavaModel new.
'./output.json' asFileReference readStreamDo: [ :stream | model importFromJSONStream: stream ].
model rootFolder: '.'.

diagram := Famix2Mermaid new
    model: model;
    generateClassDiagram.

visitor := MeWritterVisitor new.
visitor endOfLine: String crlf.

"Export the diagram in the markdown file './wiki-export/mermaid.md'"
'./wiki-export/mermaid.md' asFileReference ensureCreateFile; writeStreamDo: [ :stream |
    stream << '```mermaid'; << String crlf.
    diagram exportWith: visitor to: stream.
    stream << String crlf; << String crlf; << '```'; << String crlf.
].

Stdio stderr << '=== End Pharo ===' << String crlf.
Smalltalk snapshot: false andQuit: true
```

- Finally, we update the `.gitlab-ci.yml` file to execute the Moose analysis and update the GitLab wiki project.

```yaml
# The "analyze" stage must also be added to the "stages" list of the file
analyze:
  stage: analyze
  image: hpiswa/smalltalkci
  variables:
    ORIGIN_IMAGE_NAME: BLMoose
  needs:
    - job: parse
      artifacts: true
  script:
    # Set up a Moose Image for the analysis
    - apt-get install -y wget unzip
    - 'wget -O artifacts.zip --header "PRIVATE-TOKEN: $GILAB_TOKEN" "https://my.private.gitlab.com/api/v4/projects/219/jobs/artifacts/main/download?job=Moose64-10.0"'
    - unzip artifacts.zip
    # Install Moose using .smalltalk.ston
    - smalltalkci -s "Moose64-10" --image "$ORIGIN_IMAGE_NAME/$ORIGIN_IMAGE_NAME.image"
    # Execute analysis code
    - /root/smalltalkCI-master/_cache/vms/Moose64-10/pharo --headless BLMoose/BLMoose.image st ./ci/executeCode.st
    # Set up and update the Wiki
    - export WIKI_URL="${CI_SERVER_PROTOCOL}://username:${USER_TOKEN}@${CI_SERVER_HOST}:${CI_SERVER_PORT}/${CI_PROJECT_PATH}.wiki.git"
    # Disable ssl check that might fail
    - git config --global http.sslverify "false"
    - rm -rf "/tmp/${CI_PROJECT_NAME}.wiki"
    - git clone "${WIKI_URL}" /tmp/${CI_PROJECT_NAME}.wiki
    - rm -rf "/tmp/${CI_PROJECT_NAME}.wiki/wiki-export"
    - mv -f wiki-export /tmp/${CI_PROJECT_NAME}.wiki
    - cd /tmp/${CI_PROJECT_NAME}.wiki
    # set committer info
    - git config user.name "$GITLAB_USER_NAME"
    - git config user.email "$GITLAB_USER_EMAIL"
    # commit the file
    - git add -A
    - git commit -m "Auto-updated file in CI"
    # push the change back to the main branch of the wiki
    - git push origin "HEAD:main"
```

Push advanced diagnostics to SonarQube

Another possible integration is with SonarQube. Again, to perform the SonarQube integration, we have to modify .smalltalk.ston, ci/executeCode.st, and .gitlab-ci.yml. We also have to create a reports/rules/rules.ston file that contains the rules executed by Moose.

- For the

.smalltalk.ston, this time, we load the FamixCritics to SonarQube project

SmalltalkCISpec {

#loading : [

SCIMetacelloLoadSpec {

#baseline : 'FamixCriticSonarQubeExporter',

#repository : 'github://badetitou/Famix-Critic-SonarQube-Exporter:main',

#directory : 'src',

#platforms : [ #pharo ],

#onConflict : #useIncoming,

#onUpgrade : #useIncoming

}

],

#testing: {

#failOnZeroTests : false

}

}- For the

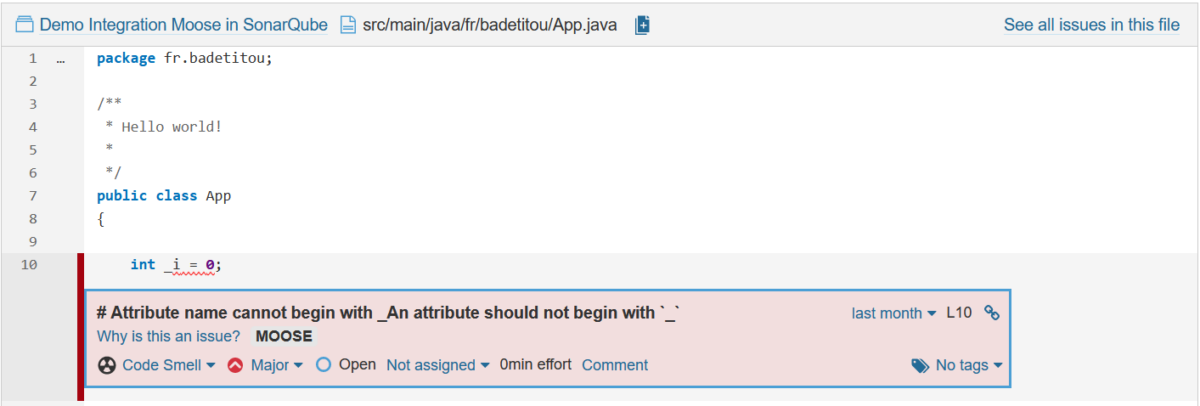

reports/rules/rules.ston, it includes the rules that Moose will execute when analyzing the code. Creating this file is made easy by the Critics browser of Moose. The example below presents one rule that checks that Java attributes do not begin with_.

```ston
FamixCBContext {
  #contextBlock : '[ :collection | \r\t collection select: [ :el | el isKindOf: FamixJavaAttribute ] ]',
  #summary : '',
  #name : 'FamixJavaAttribute';
}FamixCBCondition {
  #query : '[ :entity | entity name beginsWith: \'_\' ]',
  #summary : '# Attribute name cannot begin with _\r\rAn attribute should not begin with `_`',
  #name : 'Attribute name cannot begin with _';
}OrderedCollection [
  0,
  1
]
```

- For the `ci/executeCode.st`, we ask Moose to load the previously defined rules, we execute them, and we generate the SonarQube compatible file from the results.

```smalltalk
"=== Load MooseModel ==="
model := FamixJavaModel new.
'./output.json' asFileReference readStreamDo: [ :stream | model importFromJSONStream: stream ].
model rootFolder: '.'.

"Run rules"
criticBrowser := MiCriticBrowser on: MiCriticBrowser newModel.
'./reports/rules/rules.ston' asFileReference readStreamDo: [ :stream | criticBrowser importRulesFromStream: stream ].
criticBrowser model setEntities: model.
criticBrowser model run.
violations := criticBrowser model getAllViolations.

"Export critics to file './reports/sonarGenericIssue.json' asFileReference"
targetRef := './reports/sonarGenericIssue.json' asFileReference.
FmxCBSQExporter new
    violations: violations;
    targetFileReference: targetRef;
    export.
```

- Finally, we update the `.gitlab-ci.yml` to execute the analysis and send the results to SonarQube.

```yaml
analyze:
  stage: analyze
  image: hpiswa/smalltalkci
  variables:
    ORIGIN_IMAGE_NAME: BLMoose
  needs:
    - job: parse
      artifacts: true
  script:
    # Set up a Moose Image for the analysis
    - apt-get install -y wget unzip
    - 'wget -O artifacts.zip --header "PRIVATE-TOKEN: $GILAB_TOKEN" "https://my.private.gitlab.com/api/v4/projects/219/jobs/artifacts/main/download?job=Moose64-10.0"'
    - unzip artifacts.zip
    # Install Moose using .smalltalk.ston
    - smalltalkci -s "Moose64-10" --image "$ORIGIN_IMAGE_NAME/$ORIGIN_IMAGE_NAME.image"
    # Execute analysis code
    - /root/smalltalkCI-master/_cache/vms/Moose64-10/pharo --headless BLMoose/BLMoose.image st ./ci/executeCode.st
  artifacts:
    paths:
      - reports/sonarGenericIssue.json
    expire_in: 1 week

sonar:
  stage: sonar
  image: kaiwinter/docker-java8-maven
  needs:
    - job: analyze
      artifacts: true
  variables:
    MAVEN_CLI_OPTS: '--batch-mode -Xmx10g -XX:MaxPermSize=10g'
    SONAR_URL: 'https://my.private.gitlab.com'
  script:
    - mvn clean install sonar:sonar -Dsonar.projectKey=$PROJECT_KEY -Dsonar.host.url=$SONAR_URL -Dsonar.login=$MOOSE_SONAR_TOKEN -Dsonar.externalIssuesReportPaths=../reports/sonarGenericIssue.json --settings '../maven/settings - critics.xml' -Dmaven.test.skip=true
```

Critics are then available using the SonarQube interface.

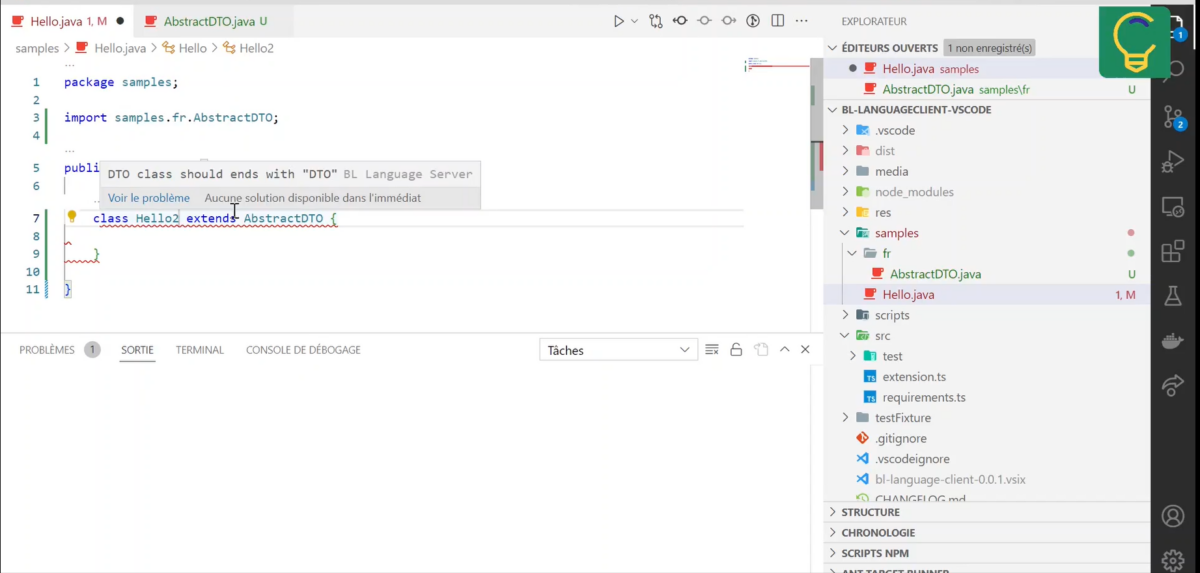
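For readers who have never looked inside a generic issue report, the Python sketch below builds one from a list of violations. It follows my understanding of the generic issue import format that SonarQube reads through `sonar.externalIssuesReportPaths` (a top-level `issues` array with `engineId`, `ruleId`, `severity`, `type`, and `primaryLocation`). The exact content written by the Moose exporter is not shown in this post, so the engine name, rule identifier, severity, and violation fields used here are all hypothetical.

```python
import json

# Hypothetical violations; the field names are made up for this illustration.
SAMPLE_VIOLATIONS = [
    {
        "rule": "attribute-name-underscore",
        "message": "Attribute name cannot begin with _",
        "file": "src/main/java/Invoice.java",
        "line": 12,
    },
]


def to_generic_issue(violation, engine_id="moose-critics"):
    """Convert one violation into an entry of the generic issue format."""
    return {
        "engineId": engine_id,  # hypothetical engine name
        "ruleId": violation["rule"],
        "severity": "MINOR",  # assumed severity, chosen for the example
        "type": "CODE_SMELL",
        "primaryLocation": {
            "message": violation["message"],
            "filePath": violation["file"],
            "textRange": {"startLine": violation["line"]},
        },
    }


def build_report(violations):
    """Wrap all converted violations in the top-level 'issues' array."""
    return {"issues": [to_generic_issue(v) for v in violations]}


if __name__ == "__main__":
    print(json.dumps(build_report(SAMPLE_VIOLATIONS), indent=2))
```

Keeping the report a plain JSON artifact is what lets the analysis job and the SonarQube job stay independent: the first only writes a file, the second only reads it.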

Integrate with VSCode

The last integration we present brings the Critics (see the SonarQube section) directly inside VSCode. This approach is similar to that of SonarLint.

To achieve such an integration, we again use Moose and Pharo. In particular, we rely on the Language Server Protocol and its implementation for Pharo (badetitou.pharo-language-server), which we extended to compute the same critics as our CI. The critics are then shown directly inside the developers' environment.
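As an illustration of what travels between a language server and the editor, the Python sketch below frames a `textDocument/publishDiagnostics` notification as defined by the Language Server Protocol: a `Content-Length` header, a blank line, then the JSON-RPC body. How the Pharo language server builds its messages is not described in this post, so the `diagnostic` and `publish_diagnostics` helpers, the source name, and the sample file are hypothetical.

```python
import json


def diagnostic(line, start_char, end_char, message, severity=2):
    """Build one LSP Diagnostic; positions are zero-based, severity 2 is Warning."""
    return {
        "range": {
            "start": {"line": line, "character": start_char},
            "end": {"line": line, "character": end_char},
        },
        "severity": severity,
        "source": "moose-critics",  # hypothetical source name
        "message": message,
    }


def publish_diagnostics(uri, diagnostics):
    """Frame a textDocument/publishDiagnostics notification for the wire."""
    body = json.dumps({
        "jsonrpc": "2.0",
        "method": "textDocument/publishDiagnostics",
        "params": {"uri": uri, "diagnostics": diagnostics},
    }).encode("utf-8")
    # The header carries the body length in bytes, followed by a blank line.
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body


if __name__ == "__main__":
    message = publish_diagnostics(
        "file:///project/src/main/java/Invoice.java",
        [diagnostic(11, 19, 25, "Attribute name cannot begin with _")],
    )
    print(message.decode("utf-8"))
```

Because the protocol is editor-agnostic, the same notification can be displayed by any editor with a Language Server Protocol client, not only VSCode.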